Yes, colleges actively check for AI in application essays using sophisticated detection tools and human review processes. According to a survey by Intelligent, admissions offices projected that their AI usage would climb from 50 percent in 2023 to 80 percent in 2024, with 2025 numbers expected to exceed these projections. The Common App explicitly defines submitting AI-generated content as your own work as potential fraud, making this a critical admissions concern.

The detection landscape has evolved rapidly. A survey conducted in early 2023 by the National Association for College Admission Counseling found that approximately 28% of four-year colleges reported using some form of AI detection tool in their admissions process. By mid-2023, that number had increased to nearly 40%.

Do Colleges Actually Check for AI in Application Essays?

Most selective colleges combine human review with AI detection software to identify potentially artificial content. Admissions officers look for style inconsistencies, generic language patterns, and unnatural flow that suggest AI assistance. Detection systems are increasingly sophisticated but not foolproof.

The detection process involves multiple layers. The same survey found that 70 percent of admissions officers saw AI being used to review recommendation letters and transcripts, with 60 percent saying that it was used to review personal essays, according to research published in The Nation.

Primary Detection Methods Used:

- Turnitin’s AI Writing Detection capabilities

- GPTZero automated scanning systems

- Human review analysing style consistency

- Cross-referencing with academic transcripts

- Follow-up interview questions about essay content

According to a recent release from Turnitin, about 11% of the papers it reviewed indicated at least 20% AI writing present, while 3% indicated more than 80%, based on Best Colleges’ analysis of Turnitin data.

Yale and Caltech have published explicit AI policies for their application cycles, signalling institutional commitment to detection protocols.

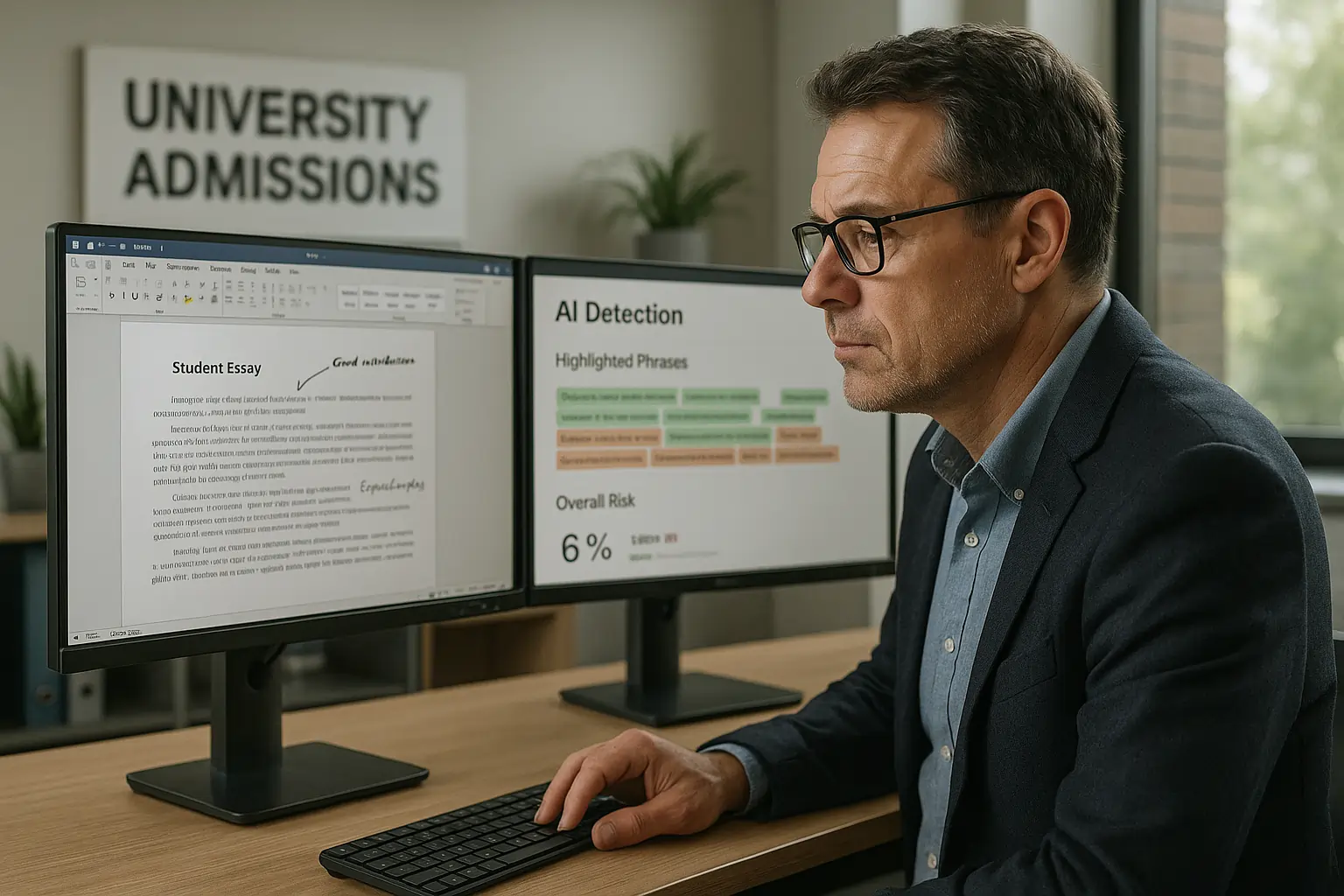

For context on how AI detection works, these systems analyse multiple linguistic patterns simultaneously.

What Counts as AI Fraud or Misuse in College Applications?

Using AI to generate substantial content for essays violates most college policies and Common App fraud rules. The boundary extends beyond simple copy-pasting to include AI-generated ideas, structure, or significant content that misrepresents authentic voice and capabilities.

The Common App explicitly defines submitting AI-generated content as your own work as potential fraud. Yale’s policy states that submitting “substantive content or output” from AI systems constitutes application fraud. Brown University prohibits AI use entirely in application materials.

Fraud Investigation Triggers:

- AI-written essay drafts, even with human editing

- AI-generated story ideas or fabricated personal anecdotes

- Substantial AI assistance in structure or argumentation

- Using AI to fabricate experiences that never occurred

- Submitting AI content without the required disclosure

Gray Areas That Create Problems:

- “Brainstorming assistance” that influences the final content

- Grammar checking with AI suggestions that alter the meaning

- AI “enhancement” of real experiences beyond recognition

Research Finding: One admissions counselor quoted in educational research noted, “Real teenagers don’t write like optimized marketing copy. When I see perfect topic sentences and flawless transitions, alarm bells go off.”

The artificial intelligence detection process has become sophisticated enough to identify subtle patterns most students consider undetectable.

How Reliable Are AI Detectors for College Essays?

AI detectors show mixed reliability with frequent false positives affecting legitimate student work. OpenAI discontinued its own AI text classifier in July 2023 due to accuracy concerns. Even Turnitin acknowledges significant false positive risks in its detection capabilities.

Detection accuracy varies significantly across tools and contexts. Akram (2023) tested six AI-detection tools (GPTZero, GPTkit, Originality, Writer, Sapling, and Zylalab) and found inconsistent results, with accuracy ranging from 55.29% to 97%, according to ResearchGate analysis.

Detector Reliability Comparison:

| Detection Tool | Claimed Accuracy | False Positive Risk | Primary Limitations |
|---|---|---|---|
| Turnitin AI | 85%+ | High for ELL students | Style inconsistency flags |
| GPTZero | 99% across diverse datasets | Just under 1% | Formal writing triggers |
| Originality.ai | 82-90% | Moderate to High | Length-dependent accuracy |
| Human Review | Variable | Low but subjective | Experience-dependent |

Why Detectors Struggle:

- AI models trained primarily on adult professional writing

- Difficulty distinguishing polished student work from AI content

- Bias against non-native English sentence patterns

- Evolving AI capabilities outpacing detection technology

One scholar in a Wired article noted that even a 1% rate of false positives is inexcusable, because for every 1,000 essays, that’s 10 students who could be accused of an academic theft they didn’t commit, as reported by CalMatters.
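
The arithmetic behind that figure can be sketched in a few lines of Python. This is an illustrative calculation only: the function name `expected_false_flags` and the 50,000-essay pool are assumptions made for the example, and the calculation treats every essay in the pool as human-written.

```python
def expected_false_flags(num_human_essays: int, false_positive_rate: float) -> float:
    """Expected number of human-written essays wrongly flagged as AI."""
    if not 0.0 <= false_positive_rate <= 1.0:
        raise ValueError("false_positive_rate must be between 0 and 1")
    return num_human_essays * false_positive_rate


if __name__ == "__main__":
    # The 1,000-essay case comes from the text; the larger pool is hypothetical.
    for pool in (1_000, 50_000):
        flagged = expected_false_flags(pool, 0.01)
        print(f"{pool} essays at a 1% false positive rate: about {flagged:.0f} wrongly flagged")
```

The count scales linearly with the size of the applicant pool, which is why a rate that sounds small can still affect many students at a large institution.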

University of Wisconsin-Madison research found that AI detection accuracy drops significantly when students make minor edits to AI-generated content, creating an escalating detection arms race.

Which Colleges Allow Limited AI Assistance?

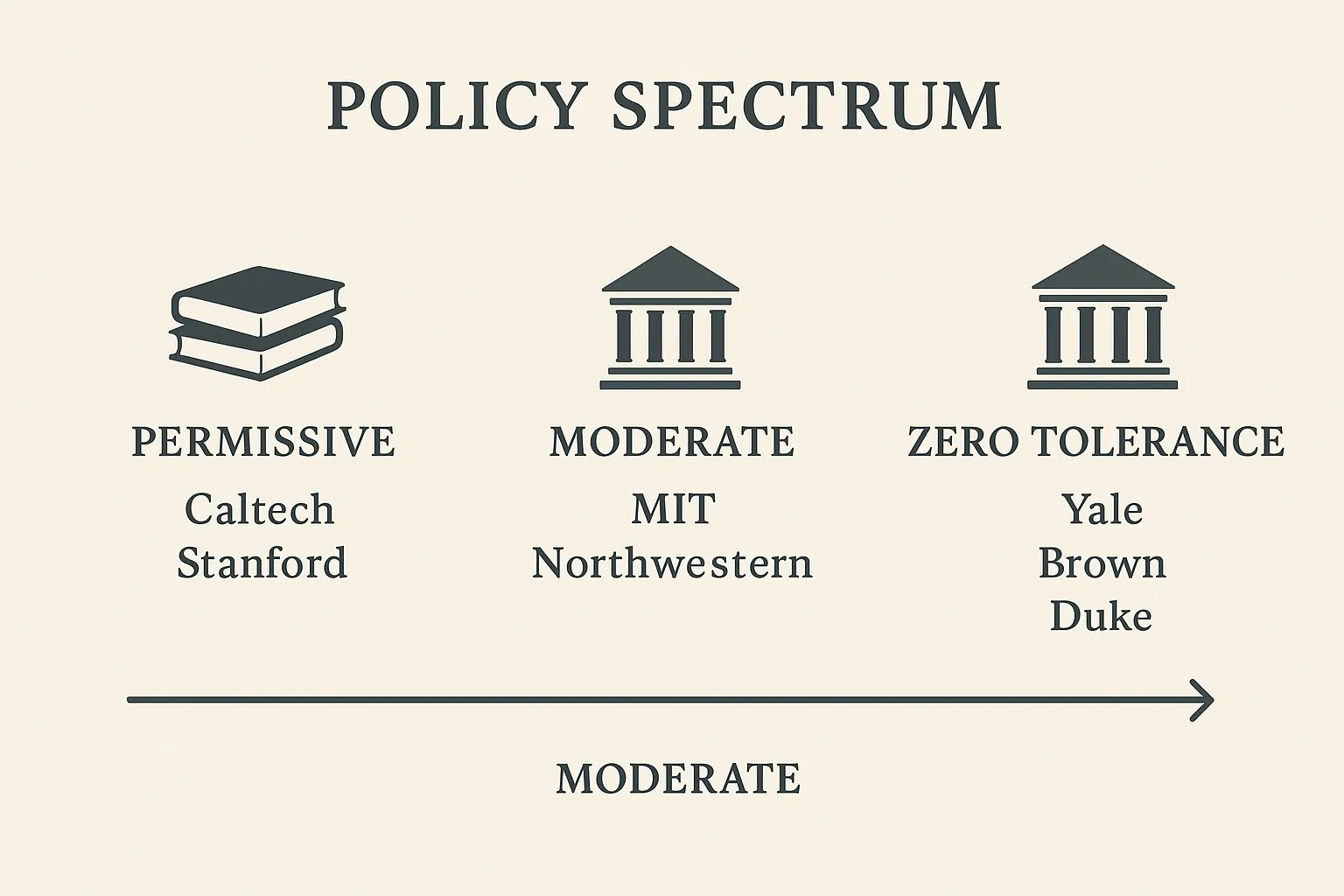

AI policies vary dramatically between institutions. Some permit brainstorming assistance, while others prohibit any AI use. Caltech provides ethical AI guidelines allowing limited use for idea generation and grammar checking while explicitly prohibiting AI-drafted content. Most selective colleges maintain restrictive approaches.

Permissive Policy Examples:

- Caltech: Brainstorming and grammar assistance allowed with disclosure requirements

- Some state institutions: Grammar tools like Grammarly are generally accepted for basic corrections

- Community colleges: Often less restrictive, focusing on plagiarism rather than AI detection

Restrictive Policy Examples:

- Yale: AI-generated substantial content equals fraud, zero tolerance

- Brown: Complete prohibition on AI in all application materials

- Most Ivy League schools: Zero tolerance approaches with strict consequences

The Disclosure Challenge: Schools allowing limited AI use often require disclosure, but students worry this creates evaluation bias. Caltech’s approach asks applicants to follow ethical guidelines without necessarily flagging every Grammarly correction.

According to college counselling professionals, the safest approach remains avoiding AI entirely for application essays. One counselor stated, “When in doubt, write it out yourself.”

For students concerned about college admissions requirements, authenticity has become more valuable than perfect prose.

What Happens If Your Essay Gets Flagged for AI?

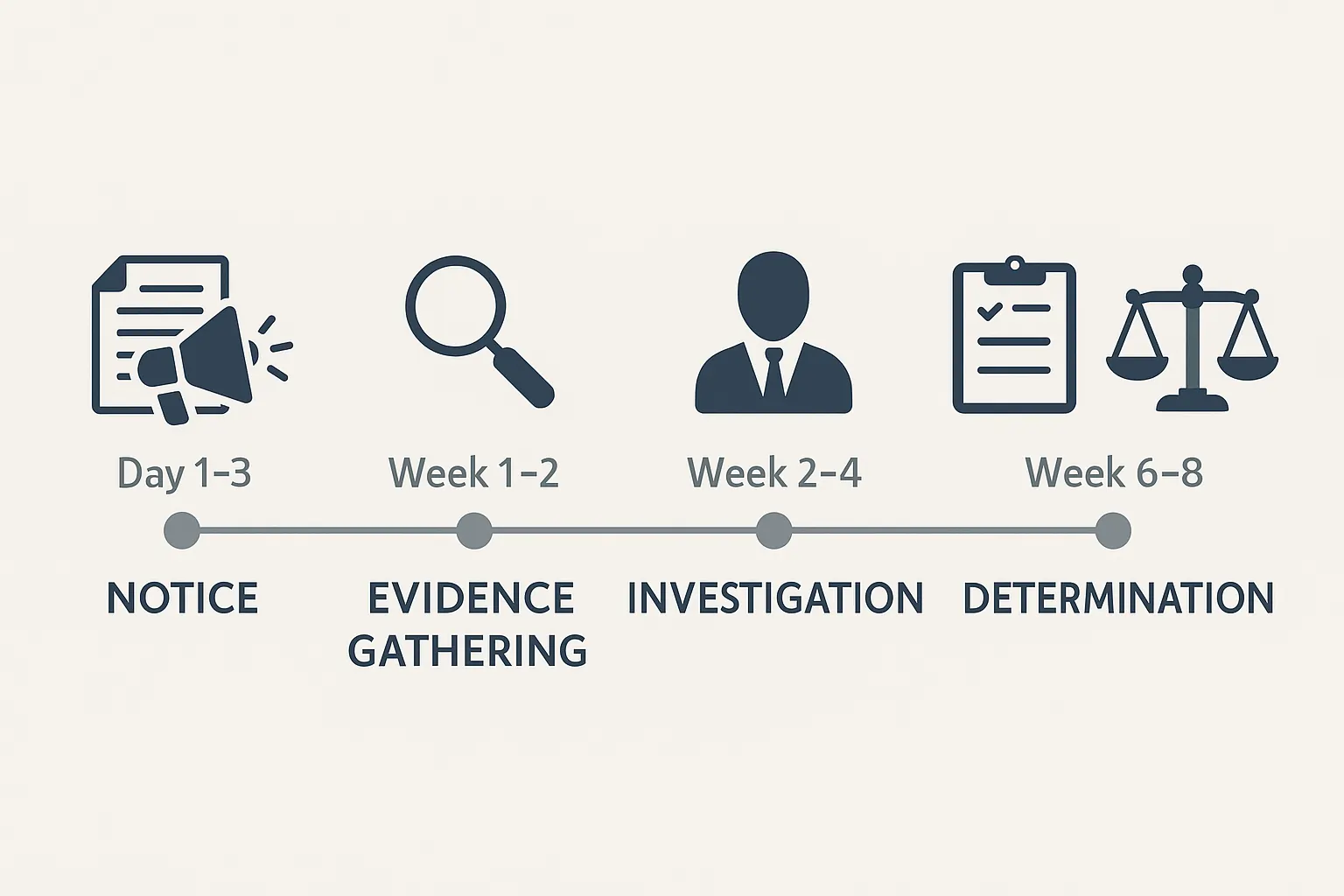

Don’t panic if your authentic essay gets flagged. Document your writing process, gather authorship evidence, and respond through official channels. The Common App has established fraud procedures involving notice, investigation, and determination phases with appeal opportunities. Most false positives resolve when students provide proper documentation.

Immediate Response Protocol:

- Gather Evidence: Save all drafts, timestamps, revision history, and teacher feedback

- Document Process: Email chains with counselors, brainstorming notes, and research sources

- Request Clarification: Ask specifically what triggered the flag through official channels

- Prepare Writing Sample: Be ready to write similar content under supervision if requested

- Stay Professional: Avoid defensive language; stick to factual responses

Schools Look For:

- Consistent writing style across multiple documents

- Evidence of an authentic revision process

- Ability to discuss essay content in detail during interviews

- Writing samples matching flagged essay quality and voice

Timeline Expectations:

- Initial flag notification: 1-2 weeks after submission

- Investigation period: 2-4 weeks for thorough review

- Appeal window: Usually 10-14 days after determination

- Final resolution: Can extend past regular decision deadlines

Case Study: A student’s legitimate essay on coding was flagged because its technical language was perceived as “too advanced.” She provided GitHub commits and project documentation, then completed a supervised writing session. The flag was removed within one week.

Authentication success depends on having a documented writing trail. Students who can demonstrate their work rarely face permanent consequences for false positives.

How to Use AI Ethically in College Applications

If target schools allow limited AI assistance, restrict usage to idea generation and basic grammar checking while maintaining authentic voice and experiences. Never use AI to create substantial content, fabricate experiences, or fundamentally alter writing style. Always follow specific disclosure requirements from individual institutions.

Ethical AI Boundaries:

- Acceptable: Topic idea brainstorming, basic grammar checking

- Gray Area: Sentence restructuring, vocabulary enhancement suggestions

- Prohibited: Content generation, experience fabrication, voice replacement

Authentication Best Practices:

- Keep detailed revision histories with timestamps

- Save all drafts demonstrating writing evolution

- Document brainstorming and research processes

- Maintain a consistent writing style across all applications

- Prepare to explain every sentence in potential interviews
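
One way to keep such a trail is a small script that saves a timestamped copy of the draft after each writing session. The sketch below is a hypothetical helper, not something any college or the Common App requires or is known to accept as proof: the function name `snapshot_draft`, the `essay_snapshots` folder, and the `log.jsonl` file are all assumptions, and the word count assumes a plain-text draft.

```python
import hashlib
import json
import shutil
from datetime import datetime, timezone
from pathlib import Path


def snapshot_draft(draft_path: str, archive_dir: str = "essay_snapshots") -> dict:
    """Copy the current draft into archive_dir and log its timestamp and hash."""
    draft = Path(draft_path)
    archive = Path(archive_dir)
    archive.mkdir(parents=True, exist_ok=True)

    data = draft.read_bytes()
    # UTC timestamp with microseconds so repeated snapshots get distinct names.
    stamp = datetime.now(timezone.utc).strftime("%Y%m%dT%H%M%S%fZ")
    copy_path = archive / f"{draft.stem}_{stamp}{draft.suffix}"
    shutil.copy2(draft, copy_path)  # copy2 also keeps the file's modification time

    entry = {
        "snapshot": copy_path.name,
        "saved_at_utc": stamp,
        "sha256": hashlib.sha256(data).hexdigest(),
        "word_count": len(data.decode("utf-8", errors="replace").split()),
    }
    # Append one JSON object per line so the log stays easy to read.
    with (archive / "log.jsonl").open("a", encoding="utf-8") as log:
        log.write(json.dumps(entry) + "\n")
    return entry
```

Calling `snapshot_draft("my_essay.txt")` (a placeholder file name) at the end of each session builds a dated series of drafts showing how the essay evolved. Version history in a word processor or a version control system serves the same purpose.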

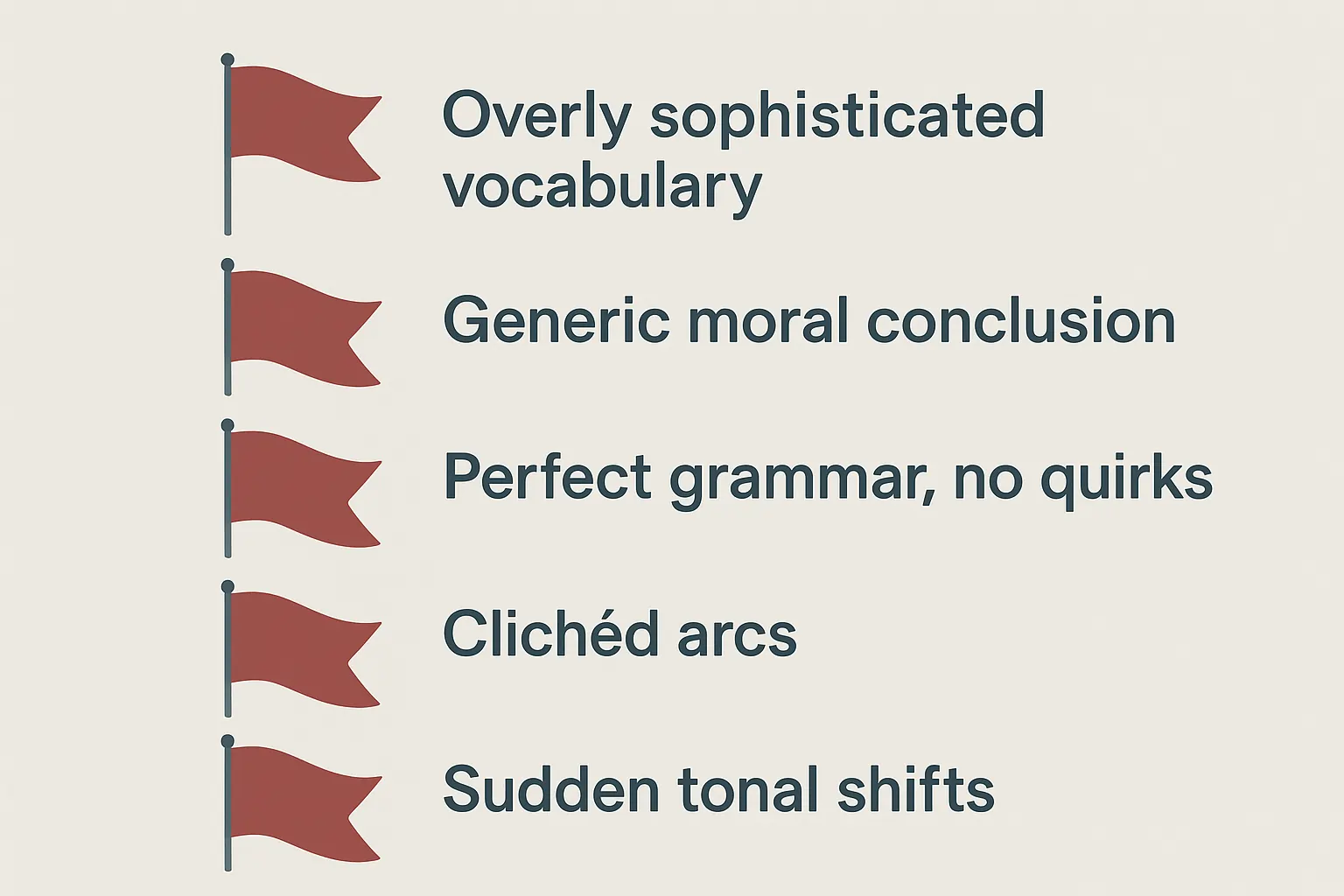

Warning Signs to Avoid:

- Sudden improvement in writing quality that is inconsistent with the academic record

- Vocabulary that doesn’t match previous academic writing

- Generic “AI-ish” phrases and transitions

- Perfect grammar if transcripts show writing struggles

- Essays resembling marketing copy rather than student voice

The Authenticity Test: Read essays aloud. If friends wouldn’t recognize your personality in the writing, you’ve crossed ethical boundaries.

Across AI tools more broadly, recognizing when assistance becomes replacement helps maintain ethical boundaries.

One admissions counselor shared this verification method: “If a student can’t explain their essay’s main metaphor or discuss experiences in detail during interviews, that’s our confirmation of inauthentic content.”

When in the Admissions Cycle Do AI Checks Happen?

AI detection occurs primarily during initial review phases, typically 2-4 weeks after application submission. The Common App cycle refreshes annually on 1 August, with new AI policies often announced alongside updated essay prompts. Early Decision and Early Action applications receive identical scrutiny to Regular Decision submissions.

Detection Timeline:

- Submission Phase: Essays uploaded to application platforms

- Initial Screening: 1-2 weeks for automated detector scans

- Human Review Phase: 2-4 weeks for admissions officer evaluation

- Flag Investigation: Additional 1-3 weeks if concerns arise

- Final Decision: Can extend past expected notification dates

Peak Detection Periods:

- Early Decision deadline crunch (November-December)

- Regular Decision application rush (January-February)

- Waitlist review periods (April-May)

System Limitations: Applications submitted during peak periods may receive less thorough AI screening due to volume constraints. However, the likelihood of detection remains high, though identification may be delayed.

Additional Screening: Schools conduct spot checks on admitted students, particularly scholarship candidates or students whose essays significantly outperform their academic records.

Evolution Note: Detection systems continuously evolve. Strategies working in current cycles might fail dramatically in future admissions periods.

AI Detection Technology Deep Dive

Modern AI detectors analyze multiple linguistic patterns simultaneously, examining statistical anomalies suggesting artificial generation. These systems evaluate sentence structure consistency, vocabulary distribution, and stylistic markers differentiating human and machine writing. However, they struggle with edited AI content and diverse writing styles.

Detection Mechanisms:

- Perplexity Analysis: Measures text predictability patterns

- Burstiness Evaluation: Analyzes sentence length variation

- Semantic Consistency: Checks logical flow and coherence

- Stylistic Fingerprinting: Identifies AI-typical language patterns
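
The first two mechanisms can be illustrated with a toy sketch. It is not how Turnitin, GPTZero, or any commercial detector is implemented: here burstiness is approximated as the coefficient of variation of sentence length, and perplexity is computed under a simple add-one-smoothed unigram model, whereas real detectors rely on large neural language models. All function names are assumptions chosen for the example.

```python
import math
import re
from collections import Counter
from statistics import mean, pstdev


def _tokenize(text: str) -> list:
    """Lowercase the text and return its alphabetic word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def sentence_lengths(text: str) -> list:
    """Split on ., !, ? and return the word count of each non-empty sentence."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def burstiness(text: str) -> float:
    """Coefficient of variation of sentence length (std dev / mean)."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return pstdev(lengths) / mean(lengths)


def unigram_perplexity(text: str, reference: str) -> float:
    """Perplexity of `text` under an add-one-smoothed unigram model of `reference`."""
    ref_counts = Counter(_tokenize(reference))
    tokens = _tokenize(text)
    if not tokens:
        raise ValueError("text contains no words")
    vocab_size = len(set(ref_counts) | set(tokens))
    total = sum(ref_counts.values())
    log_prob = sum(
        math.log((ref_counts[t] + 1) / (total + vocab_size)) for t in tokens
    )
    return math.exp(-log_prob / len(tokens))
```

In this toy setup, text with uniformly sized sentences scores a burstiness near zero, and text built from words the reference model has seen often scores a lower perplexity. Low values on both measures are the kind of statistical regularity detectors are described as associating with machine-generated writing.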

Current Limitations: Detectors miss approximately 15-20% of AI-generated content, particularly when students make manual edits. The technological arms race between AI generation and detection continues escalating.

Performance Statistics: On a narrower task, independent testing showed systems identifying 99.5% of AI-written abstracts with no false positives among human-written text, according to GPTZero benchmarking data.

For students exploring AI detection methods, understanding these mechanisms helps maintain ethical boundaries.

Expert Statistics Roundup

Usage Growth:

- Early 2023: About 28% of four-year colleges reported using AI detection tools (NACAC survey)

- Mid-2023: Nearly 40% adoption rate

- 2024: 80% of admissions offices projected to use AI (Intelligent survey)

- 2025: Expected to exceed 90% institutional adoption

Detection Accuracy Ranges:

- Turnitin AI: 85%+ claimed accuracy

- GPTZero: 99% accuracy with <1% false positives

- Multiple tools tested: 55.29% to 97% accuracy range

- Human review: Variable but generally more reliable

False Positive Concerns:

- Even 1% false positive rate affects 10 students per 1,000 essays

- International students face higher false positive rates

- Non-native English patterns frequently trigger detectors

Frequently Asked Questions

Can colleges detect ChatGPT specifically?

No, detectors generally identify AI-generated patterns, not specific tools. ChatGPT, Claude, and Gemini produce similar linguistic signatures triggering detection systems.

What if I only used AI for brainstorming?

Policy compliance depends on the target school’s requirements. Some schools permit brainstorming assistance, while others prohibit any AI interaction. When uncertain, avoid AI entirely.

Do colleges check supplemental essays, too?

Yes, all essay components undergo identical screening processes. Short responses and supplemental prompts receive the same scrutiny as personal statements.

Will using Grammarly get me flagged?

Basic grammar checking rarely triggers AI detectors, but Grammarly’s advanced rewriting features might. Limit use to simple spell-check functions.

Can I appeal a false positive?

Absolutely. Provide comprehensive documentation of your writing process, including drafts, timestamps, and revision history. Most false positives resolve successfully with proper evidence.

Do international students face higher false positive rates?

Yes, non-native English patterns frequently trigger AI detectors incorrectly. International students should prepare extensive documentation of their writing process.

Top 5 Red Flags Admissions Officers Cite

- Generic AI-ish phrasing: Overuse of phrases like “in today’s society” or “throughout history”

- Sudden style shifts: Vocabulary jumps inconsistent with academic record

- Templated moral conclusions: Cookie-cutter life lessons that feel manufactured

- Unearned wisdom claims: Insights that seem too mature or philosophical for genuine teen experience

- Perfect structure without personality: Flawless organization lacking an authentic voice

The Bottom Line: Authenticity Strategy

The AI detection landscape will continue evolving. Colleges invest heavily in these systems because authentic voice remains the cornerstone of holistic admissions evaluation. Genuine experiences, honest reflections, and natural writing style create connections no AI can replicate.

Successful students aren’t those with perfect prose, but those whose personalities emerge through imperfect sentences. Admissions officers recognize authentic vulnerability, genuine excitement, and real growth patterns from a considerable distance.

Strategic Recommendation: Write your own essays. Tell your own stories. Trust that authentic voice, including grammatical errors, carries more weight than AI-perfected content.

The future of college admissions belongs to students brave enough to be themselves on paper. Don’t let artificial intelligence steal your authentic competitive advantage.