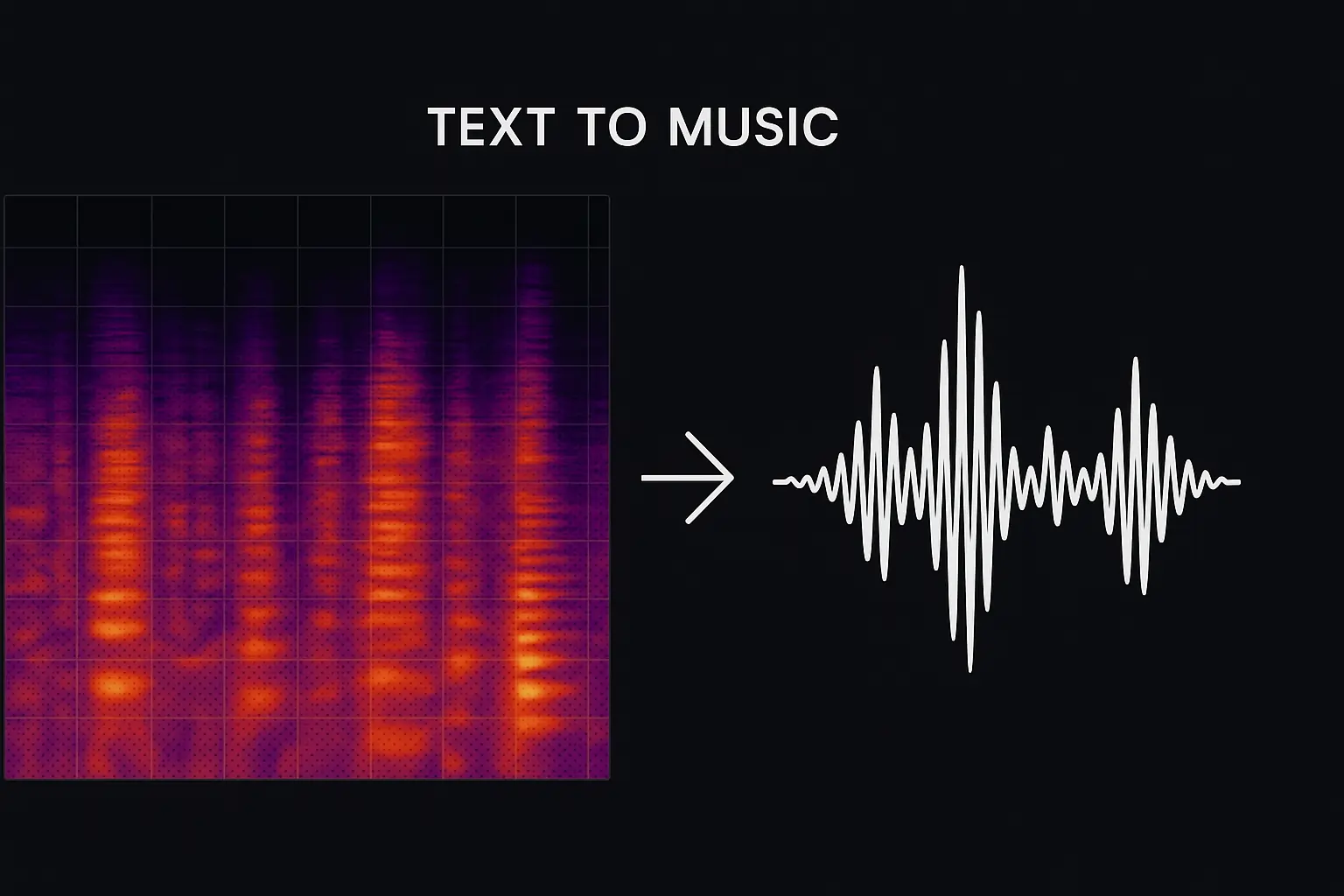

Riffusion is an open-source AI tool that generates music from text prompts by converting them into spectrograms, which are then transformed into sound. Unlike traditional music production, Riffusion relies on AI image-generation models like Stable Diffusion to create unique audio clips. AI in music production has grown significantly in recent years, with models like Riffusion providing new avenues for creativity. This guide explains how Riffusion works and its applications for creators in 2025.

What Is Riffusion and Why Was It Created?

Riffusion is an open-source AI tool that converts text prompts into music using diffusion models. It was created to explore how AI can generate musical compositions from textual descriptions, utilizing the same technology that powers image generation, like Stable Diffusion.

Riffusion emerged from the intersection of image-generation AI and music and is built around one idea: representing sound visually as a spectrogram. A spectrogram is an image in which the horizontal axis is time, the vertical axis is frequency, and brightness shows how loud each frequency is. Instead of working directly with audio files, Riffusion first creates a spectrogram, which is then transformed back into audio. This process is similar to what image-generation models do when creating visuals from text.
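To make the idea of sound as an image concrete, here is a minimal Python sketch of how spectrogram magnitudes could be stored as 8-bit grayscale pixels and recovered again. The power-curve compression (exponent 0.25), the `max_value` normalization, and the helper names are illustrative assumptions, not Riffusion's exact parameters or code.

```python
def magnitude_to_pixel(mag: float, max_value: float, power: float = 0.25) -> int:
    """Hypothetical helper: map a non-negative spectrogram magnitude to an 8-bit gray level."""
    # Normalize to [0, 1], clipping anything above max_value.
    x = min(max(mag / max_value, 0.0), 1.0)
    # A power curve below 1 boosts quiet components so they stay visible in the image.
    return round(255 * x ** power)


def pixel_to_magnitude(pixel: int, max_value: float, power: float = 0.25) -> float:
    """Hypothetical helper: invert magnitude_to_pixel, up to 8-bit quantization error."""
    return max_value * (pixel / 255) ** (1 / power)


row = [0.0, 0.01, 0.5, 2.0, 10.0]  # magnitudes of one spectrogram row (made-up values)
pixels = [magnitude_to_pixel(m, max_value=10.0) for m in row]
restored = [pixel_to_magnitude(p, max_value=10.0) for p in pixels]
print(pixels)
print(restored)
```

Because each pixel holds only 256 levels, the round trip is lossy: the recovered magnitudes are close to, but not exactly, the originals.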

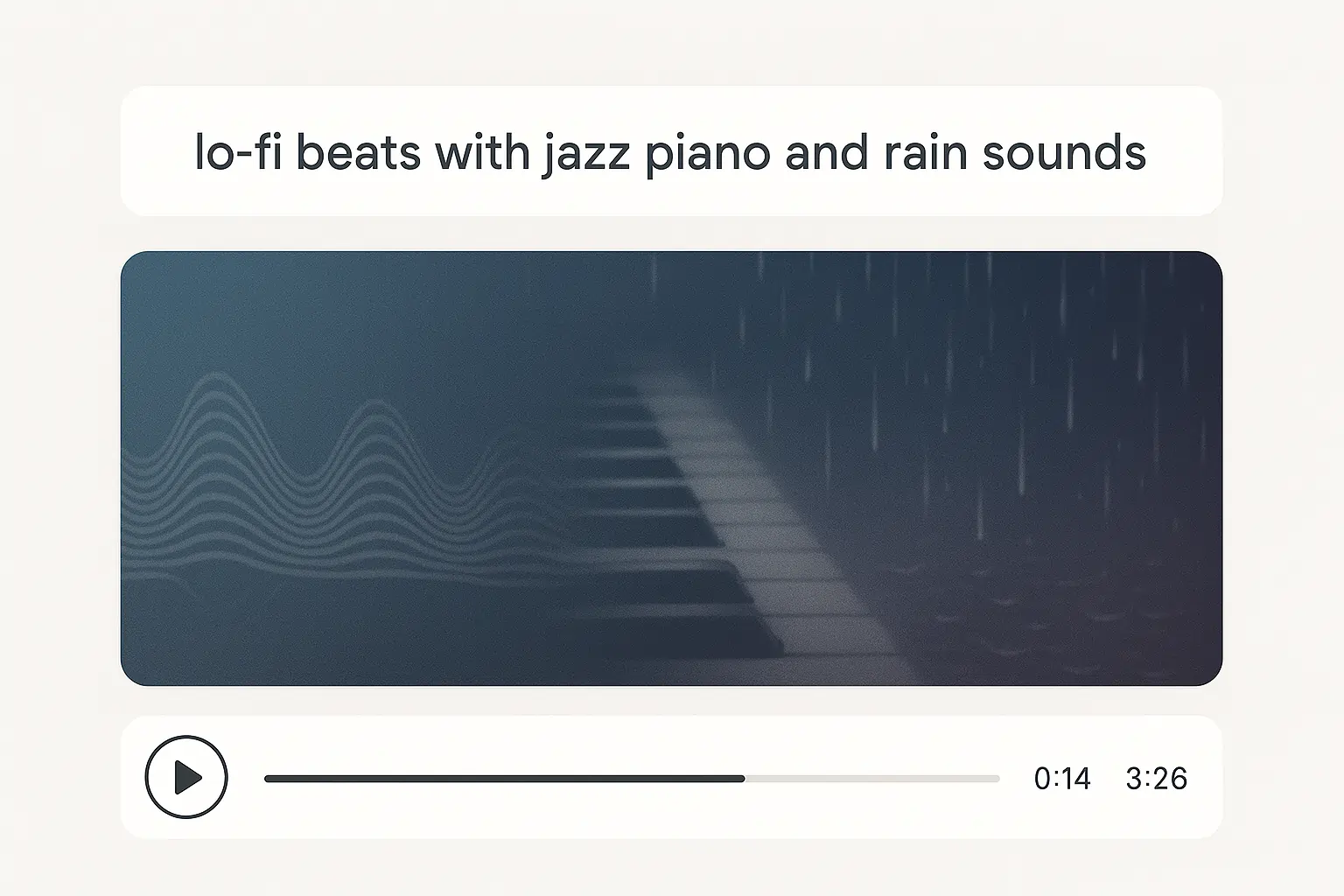

For instance, you could input a text prompt like “soft jazz with rain in the background” into Riffusion, and it will generate a unique audio clip that fits the description. This model showcases the potential of text-to-music AI in a way that’s both innovative and user-friendly. (Source: Riffusion GitHub Repo)

How Does Riffusion Turn Text into Music?

Riffusion works by translating your text prompts into spectrograms, which are visual representations of sound.

It then uses a diffusion model to iteratively refine these spectrograms before converting them into audio.

Let’s break it down:

- Enter a Prompt: You provide a detailed text prompt (like “lo-fi jazz with a smooth piano riff”).
- Generate Spectrogram: The AI uses Stable Diffusion’s image-generation technology to map that text into a spectrogram (a visual representation of frequencies over time).
- Refining Process: Generation starts from random noise, which the diffusion model removes step by step, guided by your prompt, until a coherent, musical spectrogram emerges.
- Conversion to Audio: Once the spectrogram is ready, it is turned back into sound with an inverse short-time Fourier transform. Because the image stores only how loud each frequency is, not its phase, the phase must first be estimated; Riffusion’s code uses the Griffin-Lim algorithm for this.
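The plain-Python sketch below computes a short-time magnitude spectrogram with a naive DFT and shows why the conversion back to audio needs a phase estimate: a sine and a cosine at the same pitch differ only in phase, yet they produce identical spectrograms. The sample rate, frame size, and hop are arbitrary choices for illustration, and real implementations use an FFT library rather than this O(N²) DFT.

```python
import cmath
import math


def dft_magnitudes(frame):
    """Magnitudes of the discrete Fourier transform for bins 0..N/2 (naive O(N^2) DFT)."""
    n = len(frame)
    return [
        abs(sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(frame)))
        for k in range(n // 2 + 1)
    ]


def spectrogram(signal, frame_size=64, hop=32):
    """Short-time magnitude spectrogram: one column of bin magnitudes per frame."""
    # Periodic Hann window to reduce leakage between neighbouring frequency bins.
    window = [0.5 - 0.5 * math.cos(2 * math.pi * i / frame_size) for i in range(frame_size)]
    columns = []
    for start in range(0, len(signal) - frame_size + 1, hop):
        frame = [s * w for s, w in zip(signal[start:start + frame_size], window)]
        columns.append(dft_magnitudes(frame))
    return columns


sample_rate = 1000
tone_a = [math.sin(2 * math.pi * 125 * t / sample_rate) for t in range(256)]
tone_b = [math.cos(2 * math.pi * 125 * t / sample_rate) for t in range(256)]  # same pitch, 90-degree phase shift

spec_a, spec_b = spectrogram(tone_a), spectrogram(tone_b)
peak_bin = max(range(len(spec_a[0])), key=lambda k: spec_a[0][k])
print(peak_bin, peak_bin * sample_rate / 64)  # 8 125.0: bin index and its frequency in Hz

# The magnitudes match even though the waveforms differ: the phase information is gone.
same = all(abs(x - y) < 1e-9 for col_a, col_b in zip(spec_a, spec_b) for x, y in zip(col_a, col_b))
print(same)  # True
```

Since the image cannot tell these two waveforms apart, an algorithm such as Griffin-Lim has to settle on a plausible phase before the inverse transform can produce audio.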

Step-by-Step Process:

- Step 1: Text → Spectrogram.
- Step 2: The diffusion model refines the spectrogram.
- Step 3: Spectrogram → Audio output.

If you type in “ambient sound with ocean waves and birds chirping,” Riffusion will create a sound clip that combines these elements, giving you an atmospheric track. (Source: Stable Diffusion – arXiv)
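The refinement step can be illustrated with a toy, deterministic DDIM-style sampling loop in plain Python. This is a sketch under heavy assumptions: the "spectrogram" is a short list of numbers, the noise schedule is a simple linear one chosen for readability, and `toy_denoiser` is a hypothetical stand-in that just returns the target, where a real model would estimate the clean image from the noisy one and the text prompt. Stable Diffusion also runs this loop on a compressed latent representation rather than directly on pixels.

```python
import math
import random

STEPS = 50


def alpha_bar(t: int) -> float:
    """Share of the clean signal kept at step t (linear schedule, assumed for illustration)."""
    return 1.0 - 0.99 * t / STEPS


def toy_denoiser(x_t, t, target):
    """Hypothetical stand-in for the trained network's estimate of the clean spectrogram.
    A real model computes this from x_t, t, and the prompt; this toy just returns the target."""
    return list(target)


def sample(target, seed=0):
    rng = random.Random(seed)
    # Generation starts from pure Gaussian noise determined by the seed.
    x = [rng.gauss(0.0, 1.0) for _ in target]
    for t in range(STEPS, 0, -1):
        a_t, a_prev = alpha_bar(t), alpha_bar(t - 1)
        x0_hat = toy_denoiser(x, t, target)
        # Noise implied by the current sample and the clean estimate.
        eps_hat = [(xi - math.sqrt(a_t) * ci) / math.sqrt(1 - a_t) for xi, ci in zip(x, x0_hat)]
        # DDIM update (eta = 0): recombine the clean estimate with that noise
        # at the lower noise level of step t - 1.
        x = [math.sqrt(a_prev) * ci + math.sqrt(1 - a_prev) * ei for ci, ei in zip(x0_hat, eps_hat)]
    return x


clean = [0.2, 0.8, 0.5, 0.1]
result = sample(clean, seed=42)
print(result)
```

With a perfect denoiser the loop lands exactly on the target. With a trained model each step's estimate is imperfect, and repeating the estimate-and-re-noise cycle over many steps is what gradually sharpens the spectrogram.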

How to Use Riffusion in 2025 (Step-by-Step Guide)

Riffusion can be used directly through its web app for simple music creation, or installed locally if you’re comfortable with a bit of coding and want more advanced control and longer compositions.

Here’s a quick guide to both methods:

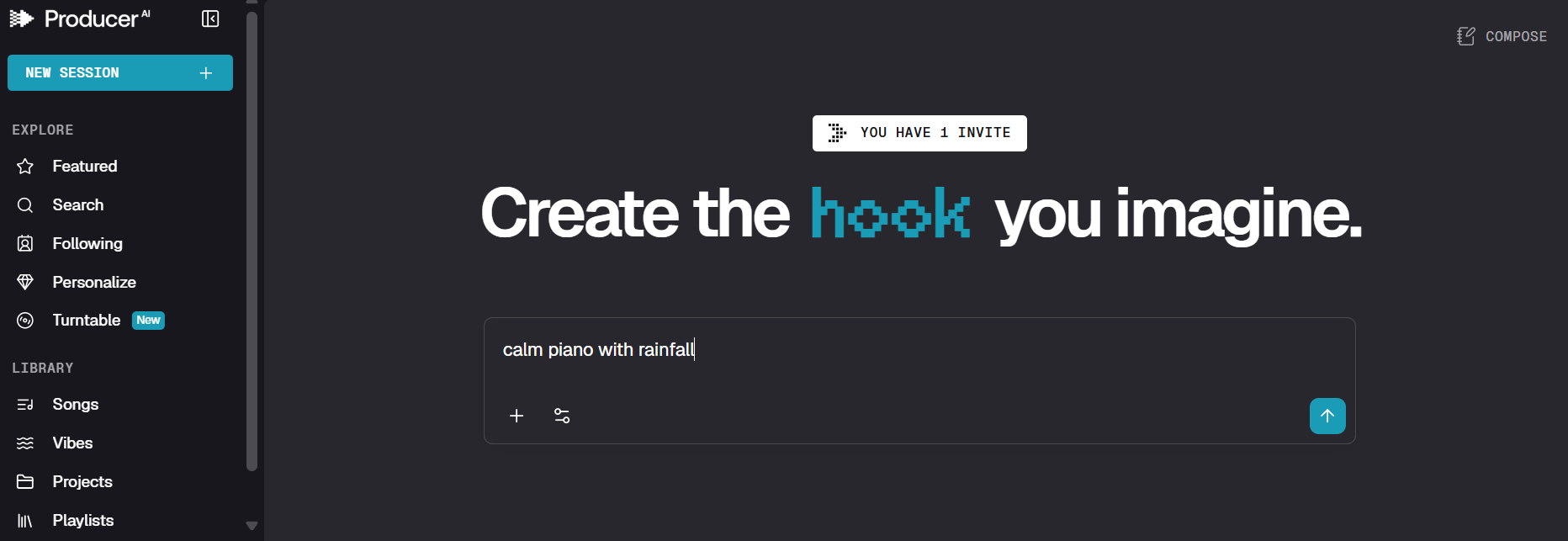

Using Riffusion Online (Easiest Way)

1. Visit the Riffusion website at riffusion.com.

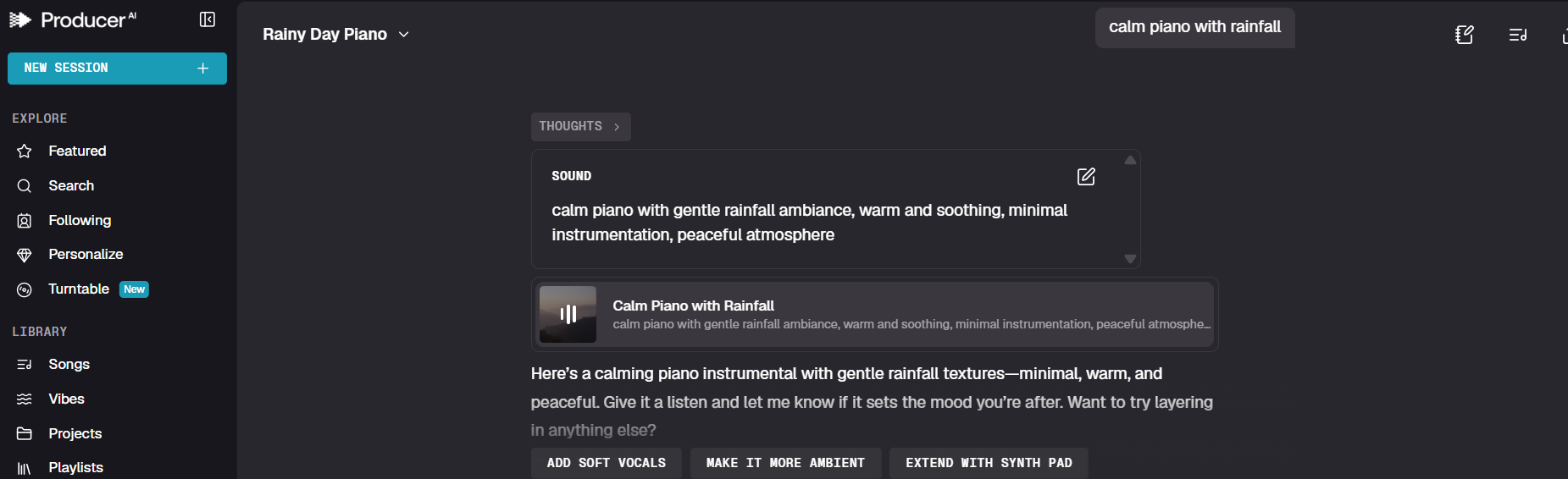

2. Enter a Text Prompt: Type in your musical request (e.g., “calm piano with rainfall”).

3. Generate Music: Click “Generate,” and within seconds, you’ll have a music clip ready to listen to and download.

Running Riffusion Locally [Advanced]

- Requirements: Python 3.10+, PyTorch, and a GPU with at least 6 GB of VRAM for faster processing.
- Install Locally: Clone the Riffusion GitHub repo.
- Install Dependencies: Open a terminal in the cloned folder and run the installation commands given in the repository’s README.
- Generate Music: After setup, use the terminal to run prompts and create tracks.

While the web demo is quick and easy, running Riffusion locally provides more control, such as adjusting tempo or experimenting with different seed values to get more varied outputs.

What Are the Key Features and Limits of Riffusion?

Riffusion’s key features include fast music generation, an open-source model, and the ability to create loops and instrumentals. Its limitations involve a lack of vocals and short clip lengths.

Riffusion is excellent for generating quick instrumental loops, perfect for creators who need background music or who want to experiment with sound design. However, its focus is on creating shorter audio clips, making it less ideal for full-length compositions.

Combining Riffusion’s music generation with AI sound effects for video can create a dynamic soundtrack.

Strengths:

- Free & Open Source: Under the MIT License, making it accessible to all.
- Speed: Creates music in under 30 seconds.
- Versatile: Ideal for experimentation, especially for ambient or instrumental tracks.

Limitations:

- No Vocals: Riffusion currently can’t generate realistic human vocals.
- Short Clips: Music output is often limited to loops or short samples.
- No Fine-Grained Control: It lacks advanced controls for adjusting aspects such as mix balance or mastering.
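The short-clip limitation follows from each generated spectrogram image having a fixed width. As a back-of-the-envelope sketch, assuming a 512-pixel-wide image, a 10 ms hop between spectrogram columns (441 samples at 44.1 kHz), and clips placed back to back with no overlap (all assumptions for illustration), the audio covered by one image and the number of images needed for a longer piece work out as follows.

```python
import math


def seconds_per_image(width_px: int, hop_samples: int, sample_rate: int) -> float:
    """Approximate audio duration of one spectrogram image: one pixel column per hop.
    (Ignores the few extra samples an STFT window adds at the edges.)"""
    return width_px * hop_samples / sample_rate


def clips_needed(track_seconds: float, clip_seconds: float) -> int:
    """Number of back-to-back clips needed to cover a track of the given length."""
    return math.ceil(track_seconds / clip_seconds)


clip = seconds_per_image(width_px=512, hop_samples=441, sample_rate=44_100)  # assumed values
print(clip)                     # 5.12
print(clips_needed(180, clip))  # 36 clips for a 3-minute track
```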

If you need a quick lo-fi loop for a study video or podcast, Riffusion works wonders. However, if you’re looking for full songs with vocal performances, you’ll need to look elsewhere.

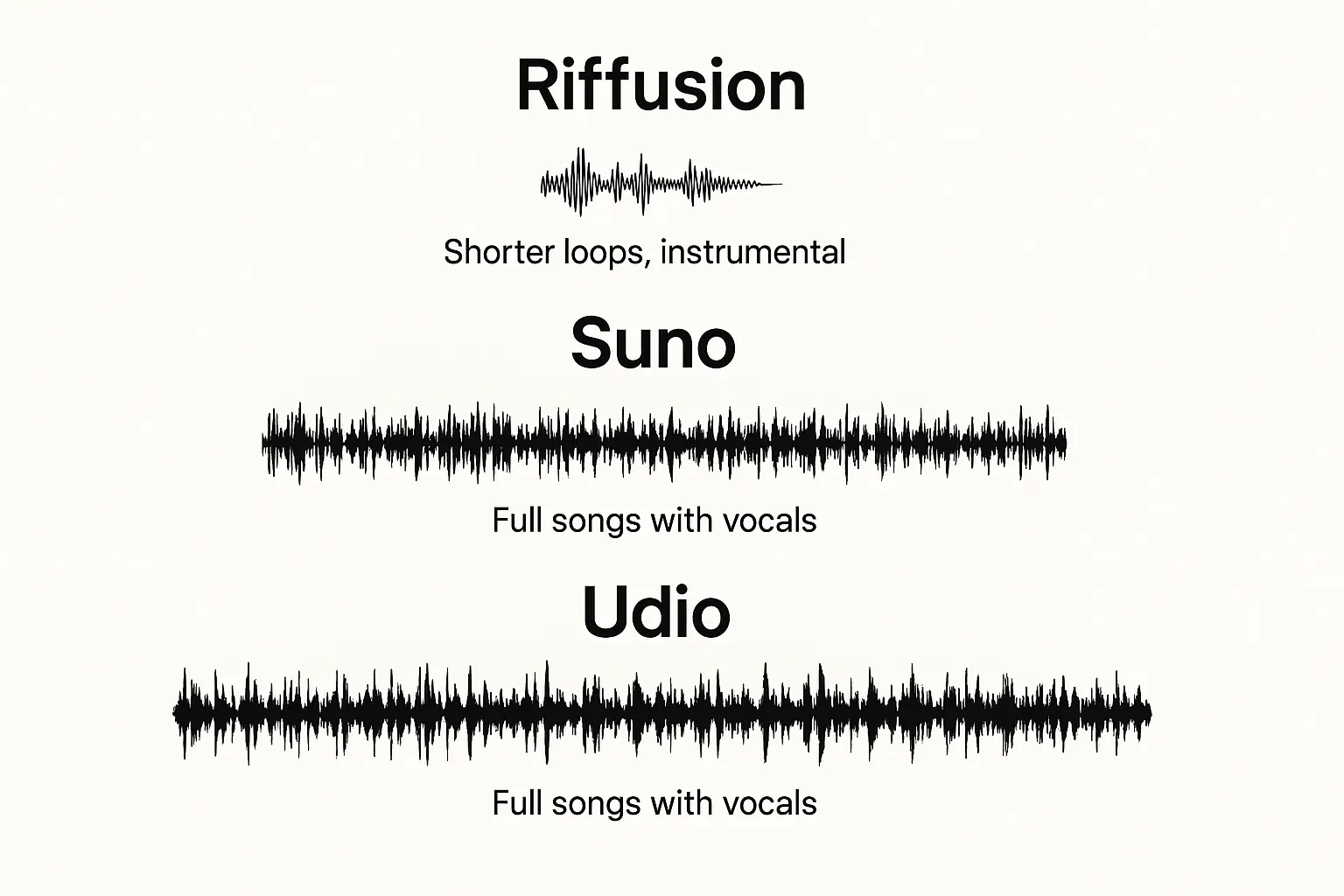

Riffusion vs. Other AI Music Generators (2025 Comparison)

Compared to tools like Suno or Udio, Riffusion is more minimal and simpler to use, but it lacks some of the depth and control that come with those commercial tools.

Riffusion’s simplicity and open-source nature make it a favorite for creators who want to turn ideas into music quickly. However, more advanced platforms like Suno and Udio offer longer tracks and better vocal synthesis.

| Feature | Riffusion | Suno | Udio |
|---|---|---|---|
| License | Open Source (MIT) | Paid (Commercial Use) | Paid (Commercial Use) |
| Vocals | Simulated, No Real Voice | Full Vocal Support | Full Vocal Support |
| Output | Loops & Short Clips | Full Songs | Full Songs |
| Ideal For | Lo-fi, Ambient, Experimental | Commercial Music, Artists | Music Production, Remixes |

For quick, simple loops, Riffusion is the go-to. But if you need vocal tracks or longer compositions, Suno or Udio would be better suited.

Can You Sell or License Music Made with Riffusion?

Yes, Riffusion’s music is free for commercial use, as it operates under the MIT License, which allows for redistribution and modification. (MIT License – Open Source Initiative)

Many users might wonder if they can use the music they create in videos, commercials, or other content for profit. Riffusion’s open-source license gives creators the freedom to sell their AI-generated tracks. The MIT License’s one condition, keeping its copyright and permission notice, applies to copies of the software itself rather than to the music, though crediting Riffusion is still good practice.

Some independent artists use Riffusion to generate background music for their YouTube videos, while others integrate it into larger compositions. Because it’s open-source, they can use the output for commercial purposes without issue.

How Do Prompts and Seeds Affect the Output?

The text prompts you provide and the “seed” number you choose directly influence Riffusion’s output, affecting genre, mood, and instrument selection.

When you enter a text prompt into Riffusion, the AI interprets it to generate a corresponding sound. The seed, a number that determines the random noise the generation starts from, can be adjusted to alter the music, producing varied outputs for the same prompt.

This level of customization makes Riffusion especially interesting for creators looking for unique results with every interaction.

How Prompts Influence Music Generation

- Descriptive Prompts: The more detailed your prompt, the more specific the output. For instance, “chill beats with a jazzy piano” will give a different result than just “chill beats.”
- General Prompts: Vague inputs can lead to more generic outputs, such as a random, simple melody.
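One way to keep prompts consistently descriptive is to assemble them from parts. The helper below is a hypothetical convenience function, not part of Riffusion; it only builds the text string you would type into the prompt box.

```python
def build_prompt(genre, mood=None, instruments=(), extras=()):
    """Hypothetical helper: combine optional musical details into one descriptive prompt."""
    prompt = f"{mood} {genre}" if mood else genre
    if instruments:
        prompt += " with " + " and ".join(instruments)
    for extra in extras:
        prompt += ", " + extra
    return prompt


print(build_prompt("beats"))                                               # beats
print(build_prompt("beats", mood="chill", instruments=["a jazzy piano"]))  # chill beats with a jazzy piano
```

The first call yields a general prompt and the second a descriptive one; adding fields is a simple way to push the output toward a specific sound.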

How Seeds Influence Variation

- Same Prompt, Different Seeds: Changing the seed changes the starting noise, so the AI produces a different take on the same prompt. Two different seed numbers for “lo-fi jazz” might generate two entirely different sound profiles.

A prompt like “uplifting piano melody with light percussion” with seed 1 may produce a soft, classical-sounding piece. In contrast, seed 42 could generate a jazzy, rhythmic variation with more complex percussion.
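In diffusion models the seed initializes the pseudo-random generator that produces the starting noise, which is why the same prompt and seed generally reproduce the same clip while a new seed gives a new variation. Below is a minimal sketch with Python's `random` module; `initial_noise` is a hypothetical helper, and real pipelines use their framework's generator instead (for example, a seeded PyTorch `torch.Generator`).

```python
import random


def initial_noise(seed: int, size: int = 8) -> list[float]:
    """Hypothetical helper: draw the starting noise for one generation from a seed."""
    rng = random.Random(seed)  # an independent generator, so global random state is untouched
    return [rng.gauss(0.0, 1.0) for _ in range(size)]


print(initial_noise(42) == initial_noise(42))  # True: same seed, same starting noise
print(initial_noise(42) == initial_noise(1))   # False: new seed, new starting noise
```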

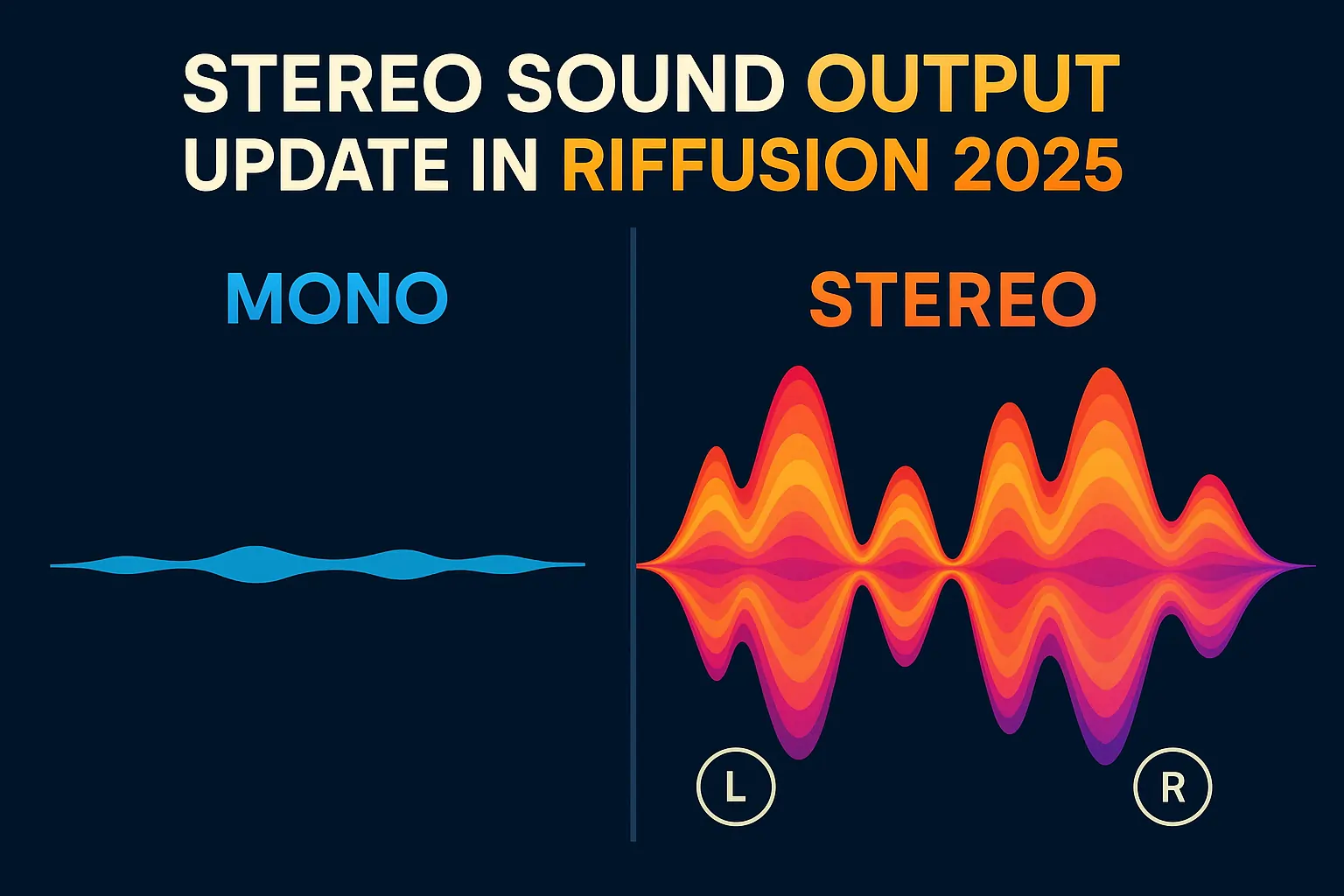

What’s New in Riffusion (2025 Update)?

Riffusion has evolved significantly in 2025, with updates that enhance sound quality, add stereo output, and support longer compositions.

The latest Riffusion v1.5 upgrade brings several important improvements, most notably stereo output for a richer sound and the ability to generate longer musical tracks.

These improvements allow the AI to create more immersive audio experiences, making it more versatile for music producers who need a fuller sound.

Key Features in 2025

- Stereo Sound: Output now supports stereo channels, adding depth to the music.
- Longer Clips: Previously limited to loops, Riffusion can now generate full tracks up to 3 minutes long.
- Improved Accuracy: The model’s refinement process has been optimized to produce clearer, more coherent music.
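At the file level, stereo output simply means two channels whose samples are interleaved frame by frame. The sketch below uses Python's standard `wave` module to write two generated tones as a 16-bit stereo WAV in memory. It is a generic illustration, not Riffusion's own export code, and `write_stereo_wav` is a hypothetical helper.

```python
import io
import math
import struct
import wave


def write_stereo_wav(left, right, sample_rate=44_100):
    """Hypothetical helper: interleave two float channels in [-1, 1] into 16-bit stereo WAV bytes."""
    if len(left) != len(right):
        raise ValueError("left and right channels must have the same length")
    frames = bytearray()
    for l_sample, r_sample in zip(left, right):
        # Clip to [-1, 1], scale to the 16-bit range, and store left then right for each frame.
        l_int = round(max(-1.0, min(1.0, l_sample)) * 32767)
        r_int = round(max(-1.0, min(1.0, r_sample)) * 32767)
        frames += struct.pack("<hh", l_int, r_int)
    buffer = io.BytesIO()
    with wave.open(buffer, "wb") as wav:
        wav.setnchannels(2)
        wav.setsampwidth(2)  # 2 bytes per sample = 16-bit audio
        wav.setframerate(sample_rate)
        wav.writeframes(bytes(frames))
    return buffer.getvalue()


sr = 8_000
left = [0.5 * math.sin(2 * math.pi * 440 * n / sr) for n in range(800)]   # 440 Hz tone on the left
right = [0.5 * math.sin(2 * math.pi * 660 * n / sr) for n in range(800)]  # 660 Hz tone on the right
data = write_stereo_wav(left, right, sample_rate=sr)

with wave.open(io.BytesIO(data), "rb") as check:
    print(check.getnchannels(), check.getnframes())  # 2 800
```

Writing to an in-memory buffer keeps the example self-contained; passing a file path to `wave.open` instead would save the clip to disk.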

Before this update, Riffusion could only generate short loops. Now, a user can enter a detailed prompt, such as “3-minute lo-fi chill beat with atmospheric sounds,” and receive a full-length track with more complexity and texture.

The Future of AI Music Creation: Riffusion’s Role

AI music generation, like Riffusion, is pushing the boundaries of creative expression, offering musicians a tool for collaboration and innovation in 2025 and beyond. (AI Music Market Trends – Statista)

AI in music is not about replacing human creativity but enhancing it. With advancements in AI, we are seeing hybrid music compositions where humans and AI collaborate.

Riffusion, alongside tools like Suno and OpenAI’s Jukebox, is part of a growing trend that allows musicians to explore new sounds and ideas that were previously unimaginable.

Emerging Trends in AI Music

- AI-Human Collaboration: Music producers will increasingly use AI to generate basic compositions that they can build on and refine.
- Real-Time Music Generation: In the future, AI like Riffusion might be used for live performances, dynamically adjusting the music based on audience mood or input.
- AI-Driven Licensing: As AI tools become more mainstream, a standardized system for licensing AI-generated music will likely emerge, enabling creators to sell and use it without concerns.

Imagine an AI-powered music app that listens to your voice and generates a custom melody based on your mood. This is a possible future direction for Riffusion-like models, where music adapts to individual emotional states in real time.

What Are the Pros and Cons of Using Riffusion for Music Creation?

Riffusion offers unique advantages for creators, but it also has certain limitations, especially for those seeking complete control over their compositions.

Let’s weigh the pros and cons of using Riffusion:

Pros:

- Free and Open-Source: Accessible for anyone to experiment with.
- Fast and Efficient: Quickly generates music, ideal for prototyping.
- Creative Exploration: Ideal for creators looking to experiment with sound and genre mixing.

Cons:

- Lacks Vocal Support: Cannot generate human vocals or full lyric-based tracks.
- Limited Control: While prompts and seeds influence the outcome, there’s no fine-tuning of specific musical elements.
- Short Track Lengths: Primarily focused on loops or brief audio clips, which may not be suitable for all projects.

If you’re a video producer needing background music for a tutorial or podcast, Riffusion is an excellent choice. However, for full-length compositions with vocals, you’ll need to explore more advanced AI tools.

Can You Sell or License Music Made with Riffusion?

Yes, Riffusion music can be used commercially, thanks to its MIT License, which allows for modification and redistribution.

The open-source MIT License offers a significant advantage to creators by permitting commercial use. This means you can integrate Riffusion-generated tracks into YouTube videos, podcasts, or even sell them in online marketplaces. Crediting Riffusion as the tool behind your music is good practice, although the license’s notice requirement applies to copies of the software rather than to your tracks.

Independent filmmakers often use Riffusion to create royalty-free background music for their content, ensuring their projects have a unique musical score without the costs of licensing traditional music.

Should You Use Riffusion for Your Music Projects?

Riffusion is an incredible tool for musicians, creators, and anyone interested in AI-generated music. While it may not replace professional music production tools, it offers a creative and accessible way to generate unique music quickly.

For creators who need quick, royalty-free background music or who want to experiment with AI’s capabilities in music production, Riffusion is an invaluable resource. However, if you require full vocal tracks or extensive control over your compositions, you may need a more advanced tool.

Riffusion represents the future of AI in music: a tool for creative exploration that allows musicians to push the boundaries of what’s possible with artificial intelligence.

Final Thoughts:

AI music is still in its infancy, but as Riffusion evolves and more features are added, we’ll likely see even greater integration of AI into mainstream music production. Whether for fun or professional use, it’s an exciting time to explore the potential of AI-generated music.