What is an “agentic reasoning” AI doctor?

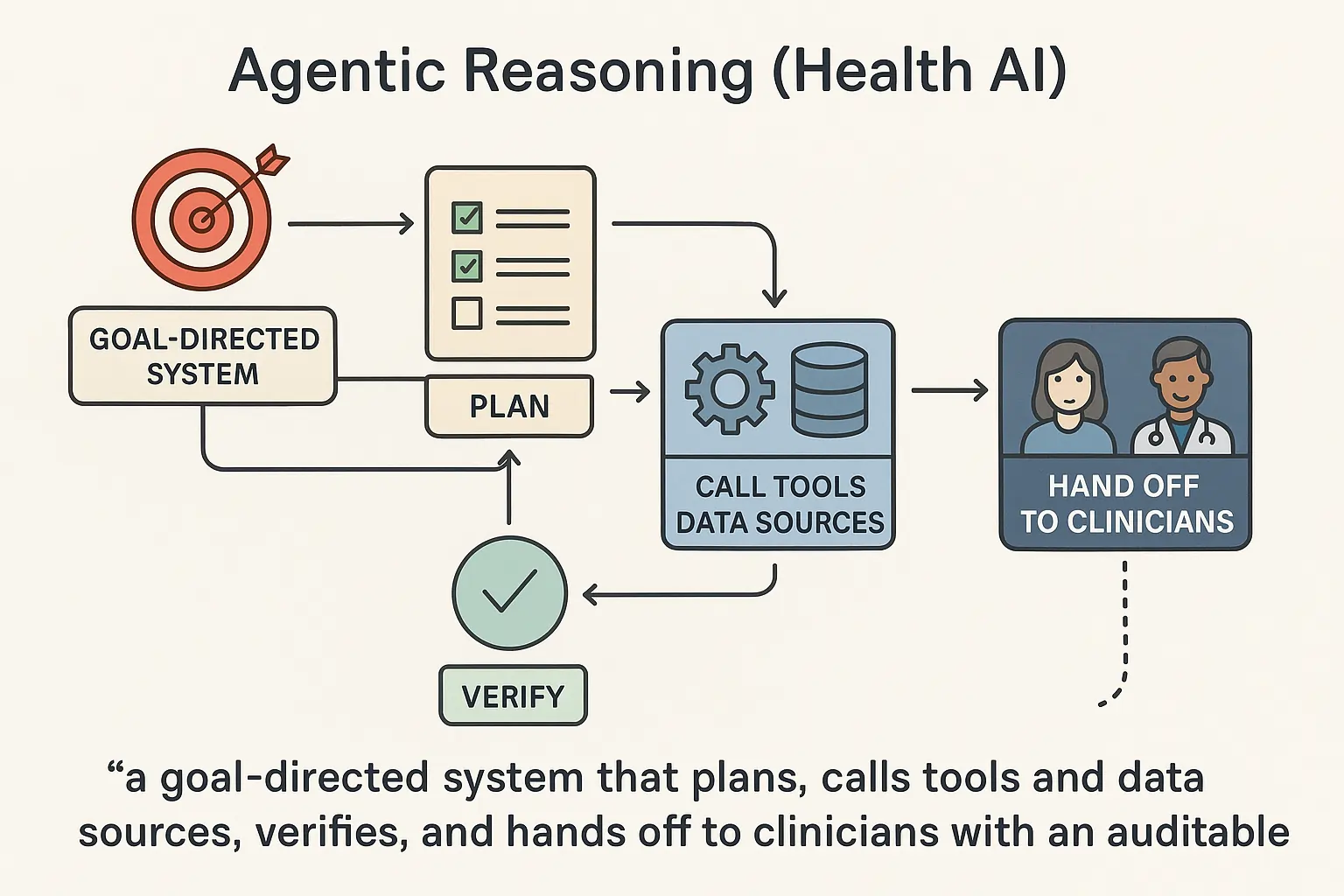

An agentic AI doctor is a goal-directed system that conducts stepwise medical reasoning, calls tools (EHR, knowledge bases, calculators), and proposes actions such as differential diagnoses or orders.

It’s not a replacement for clinicians; it augments them with transparent, auditable chains of thought and hand-offs to humans under U.S. safety, privacy, and billing rules.

Unlike rule-based decision support, agentic systems run loops: gather → reason → verify → act → learn. In medicine, this involves history-taking, evidence-based decision-making, simulated test ordering, and structured hand-offs. The promise is faster, safer decisions and a lighter clerical burden. The risk is over-reliance without robust governance.
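Here is a minimal Python sketch of that loop. It is illustrative only and does not describe how AMIE or any vendor product works. The `Proposal` type, `run_agent_loop`, and the callback names are all hypothetical. It shows the control flow: verification failures feed back into the next iteration ("learn"), and the "act" step happens only after a clinician approves.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Proposal:
    """A proposed action (e.g., a draft order) plus the evidence behind it."""
    action: str
    rationale: str
    sources: list[str] = field(default_factory=list)


def run_agent_loop(
    gather: Callable[[], dict],
    reason: Callable[[dict], Proposal],
    verify: Callable[[Proposal], bool],
    clinician_approves: Callable[[Proposal], bool],
    max_steps: int = 3,
) -> list[tuple[Proposal, str]]:
    """Hypothetical gather -> reason -> verify -> act -> learn loop with a human gate."""
    history: list[tuple[Proposal, str]] = []
    for _ in range(max_steps):
        context = gather()                # gather: history, vitals, labs
        context["history"] = history      # learn: prior outcomes inform the next attempt
        proposal = reason(context)        # reason: draft a differential or order
        if not verify(proposal):          # verify: e.g., require cited sources
            history.append((proposal, "rejected_by_verifier"))
            continue
        # act: nothing executes autonomously; a clinician must approve first
        if clinician_approves(proposal):
            history.append((proposal, "approved"))
            break
        history.append((proposal, "rejected_by_clinician"))
    return history
```

In a real deployment, `gather` would call EHR or FHIR tools and `reason` would call a model. Here they are plain callables so the control flow stays visible.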

Google Research’s AMIE is a research LLM optimized for diagnostic dialogue and reasoning. It learned via simulated self-play to improve history-taking and clinical reasoning.

“AMIE was evaluated on axes including history-taking, diagnostic accuracy, and empathy” (Google Research/DeepMind, 2024). Google Research; arXiv

How do agentic AI doctors actually work in a clinic?

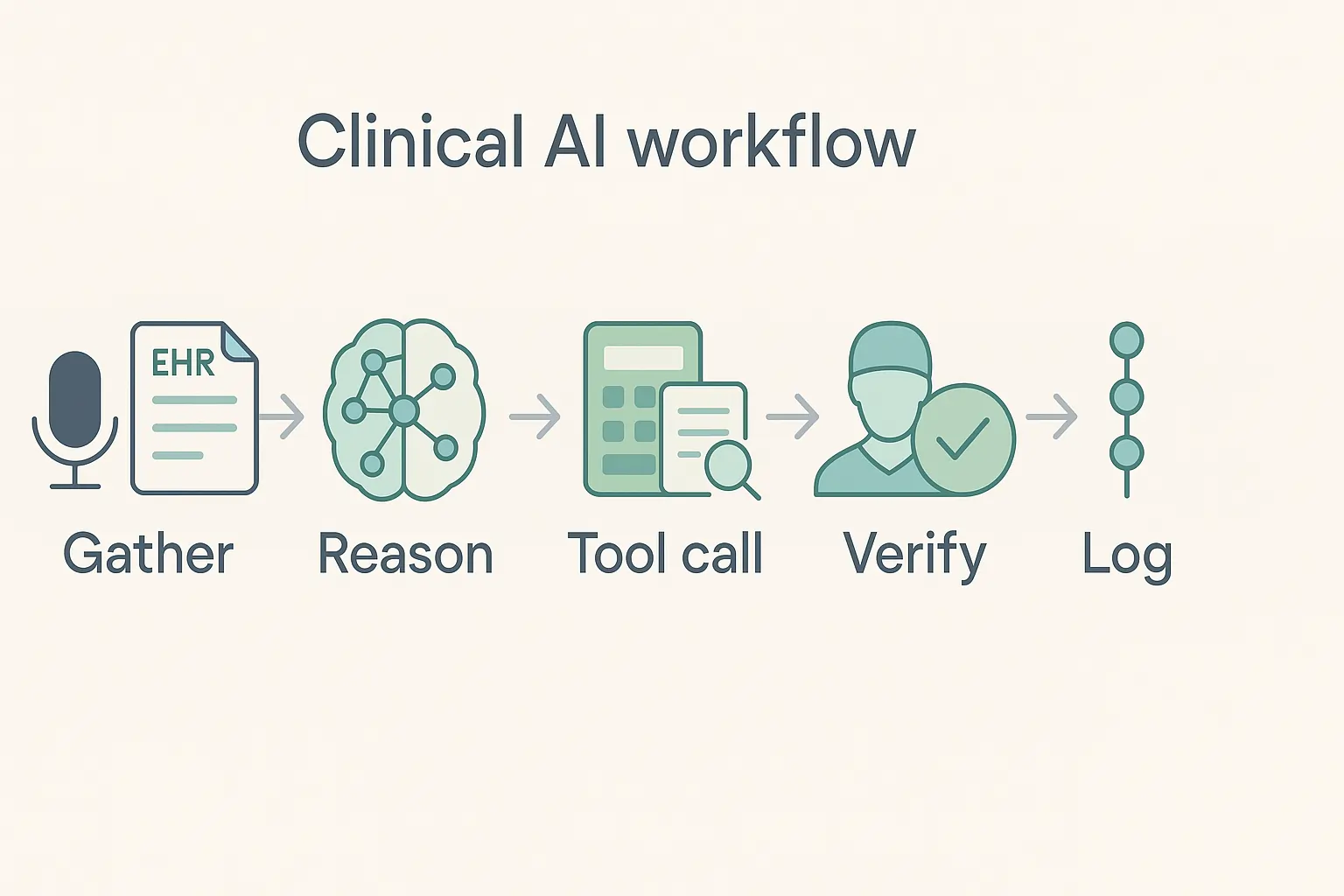

They orchestrate subtasks: interview the patient, parse vitals/labs, search evidence, generate a differential, and draft plans. They utilize tools such as EHR APIs, medical calculators, and literature agents, and route steps to humans before any order or prescription.

Auditable logs (prompts, tool calls, sources) support compliance and peer review. Integration typically starts with ambient documentation and triage, then advances to diagnostic assistance in governed sandboxes.
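One way to make such logs tamper-evident is to hash-chain them, so that editing any earlier entry breaks verification. The sketch below assumes that design. `AuditLog` and its field names are hypothetical, and hash chaining is a common technique chosen here for illustration; the sources above do not require it.

```python
import hashlib
import json
from datetime import datetime, timezone


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so identical content always hashes identically
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AuditLog:
    """Append-only log; each entry stores the hash of the previous entry."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, kind: str, payload: dict) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        body = {
            "ts": datetime.now(timezone.utc).isoformat(),
            "kind": kind,            # e.g. "prompt", "tool_call", "source"
            "payload": payload,
            "prev_hash": prev_hash,
        }
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash; any edited or reordered entry makes this False."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True
```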

Agentic stacks combine conversational intake, retrieval-augmented generation (RAG), structured output (FHIR), and role-based guardrails. In 2024–2025, many U.S. systems started with ambient scribing to reduce note time, then piloted diagnostic agents in restricted settings.
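Structured output and role-based guardrails can both be enforced in ordinary code around the model. This sketch assumes the model returns a JSON list of `{"diagnosis": ..., "sources": [...]}` objects. That shape, the `PERMISSIONS` map, and the role names are all hypothetical. Items without a source are dropped, and the agent may draft an order but only a clinician role may sign one.

```python
import json

# Hypothetical permission map: which roles may perform which actions.
PERMISSIONS = {
    "agent": {"draft_note", "draft_differential", "draft_order"},
    "nurse": {"draft_note", "sign_note"},
    "physician": {"draft_note", "sign_note", "draft_order", "sign_order"},
}


def parse_differential(raw: str) -> list[dict]:
    """Parse model JSON output and keep only items that name a diagnosis and cite a source."""
    items = json.loads(raw)
    return [
        item for item in items
        if isinstance(item, dict) and item.get("diagnosis") and item.get("sources")
    ]


def authorize(role: str, action: str) -> bool:
    """Allow an action only if the role is explicitly granted it; unknown roles get nothing."""
    return action in PERMISSIONS.get(role, set())
```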

Nuance DAX Copilot (Epic) automates clinical documentation and embeds into Epic workflows. It became generally available in January 2024, with more than 150 health systems announced as set to deploy it.

“Over 150 health systems adopted DAX Copilot GA in January 2024” (Nuance/Microsoft). Nuance MediaRoom

What benefits should U.S. providers expect in 2025?

The clearest wins are documentation time savings, more consistent histories, and quicker differentials in complex cases. Early results show strong potential in diagnostic orchestration under constraints and better patient engagement via voice agents. Compliance teams also benefit from audit trails. Financial upside comes from recovered clinician time and fewer unnecessary tests if governance is tight.

Published and reported studies highlight promising but still emerging signals. These include Microsoft’s orchestration results on case-bank challenges and AHA-reported voice-agent pilots that show gains in adherence reporting. Clinical validation and bias monitoring remain essential before broad deployment.

AI voice agents for hypertension follow-up prompted seniors to accurately report readings and improved management in preliminary AHA 2025 findings. News-Medical

“Orchestrated multi-agent system achieved 85.5% correct diagnoses on NEJM case sets under constraints” (Financial Times report on Microsoft MAI-DxO, 2025).

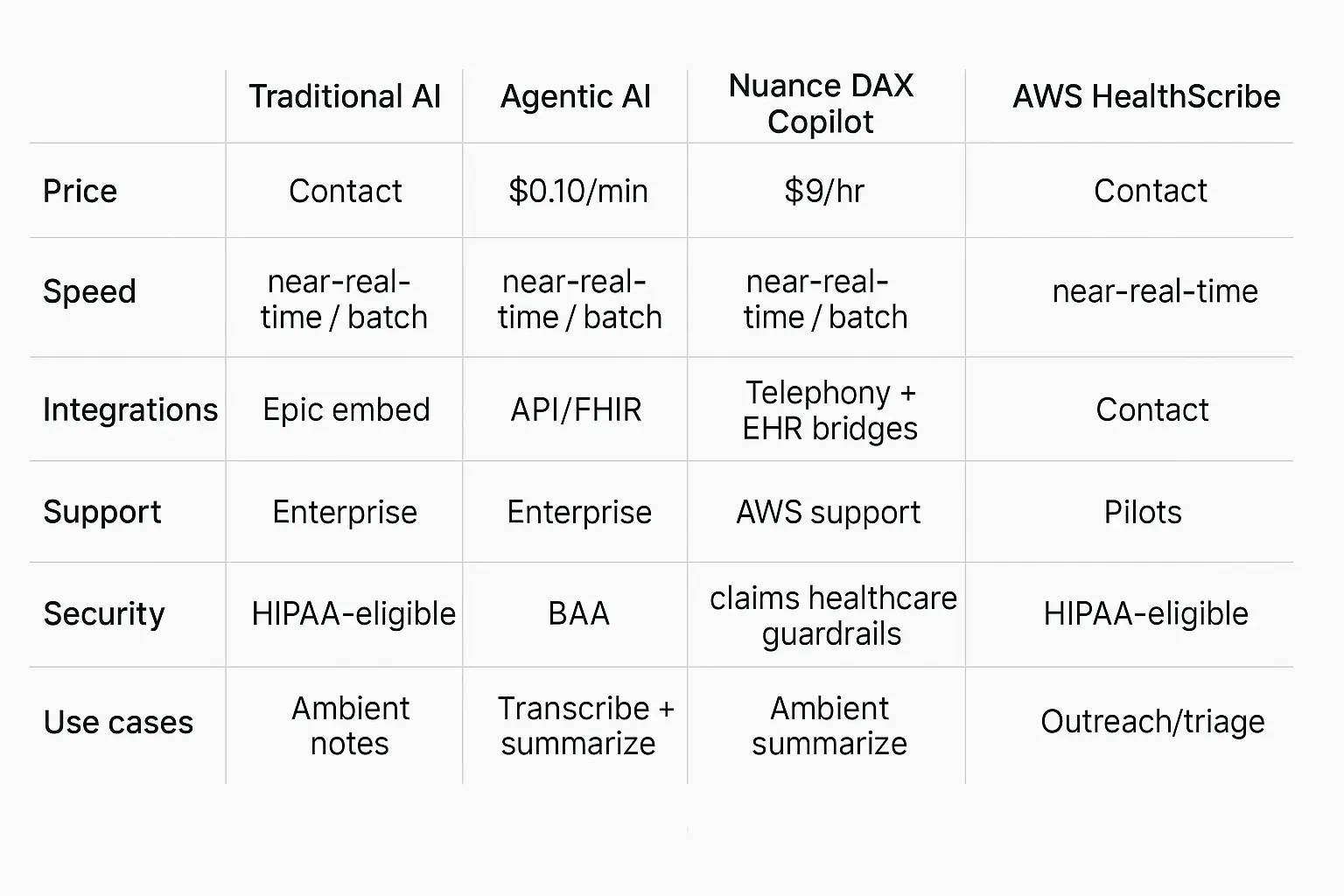

Which real systems lead today, and how do they compare?

Use ambient scribe tools for rapid ROI; evaluate diagnostic agents as research-grade. In the U.S., Nuance DAX Copilot (Epic) and AWS HealthScribe are key players in documentation. Hippocratic AI and Microsoft’s MAI-DxO push agentic assistants and diagnostic orchestration, respectively.

AMIE remains research-only. Compare them on price transparency, real-time speed, EHR integrations, enterprise support, security attestations, and sanctioned use cases.

Comparison table (consistent attributes).

* Pricing notes: AWS list pricing is public; Nuance/enterprise varies by contract; Hippocratic rate reported by company and media; diagnostic agents are largely research-only.

Sources: AWS pricing; Nuance GA release; Advisory/press on Hippocratic $9/hr; FT/BI on MAI-DxO. Amazon Web Services, Inc.

How to implement an agentic AI doctor safely (HowTo)

Start with low-risk, high-ROI jobs (ambient notes), then add agentic triage or guideline checkers in a sandbox. Use a risk-tiered governance model, PCCP-ready change controls, and publish your model cards. Integrate via FHIR APIs and keep a human in the loop for any diagnostic or treatment action. Measure time saved, error rates, and user satisfaction.
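A risk-tiered model can be expressed as a lookup from use case to required controls. The tiers, use-case names, and control labels below are hypothetical placeholders for whatever your governance committee defines. The one deliberate design choice is that unknown use cases fall into the strictest tier.

```python
from enum import Enum


class RiskTier(Enum):
    LOW = 1      # e.g. ambient notes reviewed before signing
    MEDIUM = 2   # e.g. outreach or triage agents that escalate to staff
    HIGH = 3     # e.g. diagnostic or treatment suggestions


# Hypothetical mapping; your governance committee defines the real one.
USE_CASE_TIERS = {
    "ambient_notes": RiskTier.LOW,
    "voice_outreach": RiskTier.MEDIUM,
    "guideline_check": RiskTier.MEDIUM,
    "diagnostic_support": RiskTier.HIGH,
}

BASE_CONTROLS = {"baa", "audit_logs", "model_card"}
EXTRA_CONTROLS = {
    RiskTier.LOW: set(),
    RiskTier.MEDIUM: {"escalation_path", "patient_disclosure"},
    RiskTier.HIGH: {"escalation_path", "patient_disclosure",
                    "clinician_signoff", "pccp", "sandbox_only"},
}


def required_controls(use_case: str) -> set[str]:
    # Unknown use cases default to the strictest tier
    tier = USE_CASE_TIERS.get(use_case, RiskTier.HIGH)
    return BASE_CONTROLS | EXTRA_CONTROLS[tier]


def missing_controls(use_case: str, in_place: set[str]) -> set[str]:
    return required_controls(use_case) - in_place
```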

For a checklist of encryption, logging, and PHI boundaries, see our RAG security guide.

A practical U.S. rollout aligns with the FDA’s 2024 guidance on Predetermined Change Control Plans (PCCP) for AI-enabled software, balancing iteration with safety. Pair that with HIPAA controls, vendor BAAs, and bias monitoring.

Tool/Example (workflow).

- Step 1: Pilot DAX Copilot in one clinic; success metric = note finalization time.
- Step 2: Add AWS HealthScribe for specialties without Epic and compare the cost per minute.
- Step 3: Trial a voice agent (Hippocratic or equivalent) for BP outreach; track adherence.
- Step 4: Evaluate diagnostic orchestration in a research setting, focusing on case sets.
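For Step 1, a simple before/after comparison of note finalization time is enough to start. This sketch uses medians because a few very long notes can skew a mean. The function name and the sample numbers in the usage line are made up for illustration and are not pilot results.

```python
from statistics import median


def note_time_delta(baseline_min: list[float], pilot_min: list[float]) -> dict:
    """Compare median note finalization time (minutes) before and during a pilot."""
    base, pilot = median(baseline_min), median(pilot_min)
    return {
        "baseline_median": base,
        "pilot_median": pilot,
        "minutes_saved": base - pilot,
        "pct_change": round(100 * (pilot - base) / base, 1),
    }


# Illustrative numbers only
print(note_time_delta([12, 15, 9, 14, 11], [8, 7, 10, 6, 9]))
```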

“FDA finalized PCCP guidance for AI-enabled device software functions in Dec 2024” (FDA, 2024). U.S. Food and Drug Administration

What are the most significant risks, ethics, and compliance issues?

Top risks are data leakage, hallucinated recommendations, bias across subpopulations, and unclear accountability. U.S. compliance teams should require BAAs, audit logs, provenance links, and model update notices tied to a PCCP.

Ethically, disclose AI use to patients, offer opt-outs, and ensure human oversight. For workforce impact, engage nursing and physician groups early and address job design, not just automation.

Evidence is mixed: some systems show significant gains under constraints; other analyses caution against over-claiming diagnostic “superintelligence.” Nursing unions have also raised concerns about AI nurse agents and care quality.

Build a safety case before scaling.

Governance checklist: BAA in place; ePHI data map; prompt & tool logs retained; decision provenance surfaced to the clinician; patient-facing disclosure language approved by compliance. Map vendor attestations to SOC 2 controls so audits pass without surprises.
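The checklist can double as a launch gate: nothing ships while any item is unmet. The keys below are hypothetical names that mirror the checklist items in the paragraph above.

```python
GOVERNANCE_CHECKLIST = (
    "baa_signed",
    "ephi_data_map",
    "prompt_and_tool_logs_retained",
    "provenance_shown_to_clinician",
    "patient_disclosure_approved",
)


def launch_blockers(status: dict[str, bool]) -> list[str]:
    """Return unmet checklist items in checklist order; missing keys count as unmet."""
    return [item for item in GOVERNANCE_CHECKLIST if not status.get(item, False)]
```

An empty list means the gate is open. Treating missing keys as unmet keeps a half-filled status sheet from passing by accident.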

“Systematic reviews in 2024 emphasize rigorous, context-specific evaluation before clinical use” (JAMA, 2024). JAMA Network

Beginner mistakes to avoid (troubleshooting mini-section)

- Treating a research demo as if it were a cleared medical device. Require clinical validation and guardrails first (JAMA, 2024).
- Skipping BAAs and HIPAA security reviews. Use only HIPAA-eligible services for ePHI; confirm vendor attestations (e.g., AWS HealthScribe).
- No human in the loop for diagnostic support. Keep clinician sign-off.
- Unclear patient consent. Disclose AI involvement in intake and scribing.
- No PCCP for updates. Tie model changes to monitored metrics and rollback plans (FDA, 2024).
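A PCCP is a regulatory plan, not code, but its monitoring side can be automated. The sketch below assumes each metric has a threshold declared before the update ships. The metric names and thresholds are hypothetical, and the FDA guidance does not prescribe any of this. A missing metric is treated as a failure so that broken monitoring cannot silently pass.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MetricBound:
    name: str
    threshold: float
    higher_is_better: bool


def update_decision(bounds: list[MetricBound], observed: dict[str, float]) -> tuple[str, list[str]]:
    """Return ("keep", []) if every metric meets its pre-declared bound,
    otherwise ("rollback", [names of failing or missing metrics])."""
    failing = []
    for b in bounds:
        value = observed.get(b.name)
        if value is None:
            failing.append(b.name)  # missing metric counts as a failure
            continue
        ok = value >= b.threshold if b.higher_is_better else value <= b.threshold
        if not ok:
            failing.append(b.name)
    return ("keep", []) if not failing else ("rollback", failing)
```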

Real-world examples you can learn from (with proof items)

Focus on documented deployments and published evaluations. Use ambient scribing where GA exists and pilot voice agents with clear outcomes. Treat diagnostic orchestration as research until it is peer-reviewed. Anchor every claim to a public source, and refresh stats at least twice a year.

Examples:

- Epic + Nuance DAX Copilot: GA; >150 orgs announced; enterprise support; Epic embed.
- AWS HealthScribe: public pricing; HIPAA-eligible; API-first note generation.
- Voice agents for BP: preliminary AHA 2025 reporting on adherence/accuracy.
- Multi-agent diagnostic orchestration (MAI-DxO): 85.5% accuracy on NEJM cases under constraints (media reports).
- AMIE: diagnostic dialogue research; not a product.

Refresh notes:

Nuance GA count (Jan 2024): refresh yearly. Nuance MediaRoom

AWS HealthScribe price: recheck quarterly. Amazon Web Services, Inc.

Hippocratic $9/hr claim: confirm each quarter. Advisory Board

MAI-DxO accuracy: watch for peer review. Financial Times; Business Insider

AMIE evaluations: check for 2025 updates.

Short “why it matters”

- Faster notes; fewer clicks.
- Clearer histories; better differentials.
- Auditable agents; safer iterations (PCCP).
- Start with scribing; grow to orchestration.

How much will this cost in 2025, and what’s the ROI?

Expect three cost types: usage fees for voice or scribing, platform or seat licenses, and integration work. Public pricing anchors: AWS HealthScribe bills about $0.10 per audio minute; some voice-agent vendors market ~$9 per agent-hour.

ROI comes from minutes saved per note, fewer no-shows, and faster follow-ups. Start with documentation to realize value within weeks.

Budget for a pilot clinic first. Measure baseline note time, after-visit documentation, and staff callback minutes. Stack small wins: ambient scribing, then low-risk outreach agents, then clinician-in-the-loop diagnostic assistance. Keep procurement simple with HIPAA-eligible services and BAAs.
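A back-of-the-envelope ROI for the documentation pilot multiplies minutes saved by note volume and clinician cost, then subtracts tool spend. All inputs below are hypothetical. The sketch also ignores one-time integration work and the no-show and follow-up benefits mentioned above, which you would add once they are measured.

```python
def monthly_roi(
    minutes_saved_per_note: float,
    notes_per_month: int,
    clinician_cost_per_minute: float,
    tool_cost_per_month: float,
) -> dict:
    """Rough monthly ROI: value of recovered clinician time minus recurring tool cost."""
    value = minutes_saved_per_note * notes_per_month * clinician_cost_per_minute
    net = value - tool_cost_per_month
    return {
        "value": round(value, 2),
        "net": round(net, 2),
        "roi_pct": round(100 * net / tool_cost_per_month, 1),
    }


# Hypothetical inputs: 4 min saved, 400 notes, $2/min clinician cost, $1,000/month tooling
print(monthly_roi(4, 400, 2.0, 1000.0))
```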

- AWS HealthScribe: transcription plus medical summarization at ~$0.001667 per second (≈$0.10/min), integrated via API, with evidence links included.

“Usage is billed in one-second increments at $0.001667 per second” (AWS, 2025). Amazon Web Services, Inc.
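Here is how the per-second rate translates into per-encounter cost. The sketch assumes partial seconds round up to the next whole second. It does not model any per-request minimum. The rate constant is the list price quoted above and should be rechecked.

```python
import math

HEALTHSCRIBE_RATE_PER_SECOND = 0.001667  # USD, list price quoted above; recheck quarterly


def healthscribe_cost(audio_seconds: float, rate: float = HEALTHSCRIBE_RATE_PER_SECOND) -> float:
    """Estimate cost for one encounter, rounding partial seconds up (assumption)."""
    billed_seconds = math.ceil(audio_seconds)
    return billed_seconds * rate


# A 15-minute (900 s) encounter costs about $1.50
print(round(healthscribe_cost(900), 2))
```

For example, 400 fifteen-minute encounters a month come to 360,000 billed seconds, or roughly $600.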

How do we integrate with our EHR and keep data safe?

Integrate through your vendor’s approved pathways: Epic embed for Nuance DAX Copilot, FHIR APIs for summaries and vitals, and secure cloud services that are HIPAA-eligible with BAAs.
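Vitals are sometimes captured outside the EHR, for example by an outreach agent. For that data, a FHIR R4 Observation is a common interchange shape. This sketch builds a blood-pressure Observation with the standard LOINC codes for the panel (85354-9), systolic (8480-6), and diastolic (8462-4), plus UCUM units. `bp_observation` is a hypothetical helper. How you submit the resource (endpoint, auth, vendor-specific profiles) depends on your EHR's FHIR API.

```python
import json
from datetime import datetime, timezone

LOINC = "http://loinc.org"
UCUM = "http://unitsofmeasure.org"


def _component(code: str, display: str, value: int) -> dict:
    return {
        "code": {"coding": [{"system": LOINC, "code": code, "display": display}]},
        "valueQuantity": {"value": value, "unit": "mmHg", "system": UCUM, "code": "mm[Hg]"},
    }


def bp_observation(patient_id: str, systolic: int, diastolic: int, when: datetime) -> dict:
    """Build a FHIR R4 blood-pressure Observation following the vital-signs pattern."""
    return {
        "resourceType": "Observation",
        "status": "final",
        "category": [{
            "coding": [{
                "system": "http://terminology.hl7.org/CodeSystem/observation-category",
                "code": "vital-signs",
            }]
        }],
        "code": {"coding": [{"system": LOINC, "code": "85354-9",
                             "display": "Blood pressure panel with all children optional"}]},
        "subject": {"reference": f"Patient/{patient_id}"},
        "effectiveDateTime": when.isoformat(),
        "component": [
            _component("8480-6", "Systolic blood pressure", systolic),
            _component("8462-4", "Diastolic blood pressure", diastolic),
        ],
    }


if __name__ == "__main__":
    obs = bp_observation("example", 128, 82, datetime(2025, 1, 15, 9, 30, tzinfo=timezone.utc))
    print(json.dumps(obs, indent=2))
```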

Keep an audit trail of prompts, tool calls, and sources. Tie model updates to a Predetermined Change Control Plan (PCCP) so safety reviews and rollbacks are clear.

Use least-privilege service accounts, encrypt at rest and in transit, and store only the minimum needed. Log provenance links for every suggestion surfaced to clinicians. Publish a short “AI in care” notice in your intake packets.
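Least privilege and "store only the minimum needed" can be enforced with per-service field allow-lists before any data reaches a model. The service names and fields below are hypothetical.

```python
# Hypothetical allow-lists: which record fields each service account may read.
ALLOWED_FIELDS = {
    "scribe_service": {"encounter_id", "transcript", "visit_reason"},
    "outreach_agent": {"patient_id", "preferred_phone", "next_appointment"},
}


def minimize(record: dict, service: str) -> dict:
    """Return only the fields this service may see; unknown services get nothing."""
    allowed = ALLOWED_FIELDS.get(service, set())
    return {k: v for k, v in record.items() if k in allowed}
```

Defaulting unknown services to an empty allow-list means a misconfigured account fails closed rather than open.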

- Nuance DAX Copilot (Epic) for ambient notes inside Epic; GA announced in January 2024, with 150+ organizations set to deploy. Nuance MediaRoom

“More than 150 health systems set to deploy DAX Copilot embedded with Epic” (Healthcare Dive, 2024). Healthcare Dive

Can diagnostic agents really help today, or are they hype?

Treat diagnostic orchestration as research-grade in 2025. Microsoft’s MAI-DxO achieved 85.5% accuracy on challenging NEJM cases under restricted conditions, but it is not cleared for clinical use.

Use agents to surface differentials, tests, and citations, but require clinician sign-off. Plan governance now so you can adopt safely as evidence matures.

Agentic “panels” of cooperating models show promise in constrained benchmarks. Clinical practice differs in several key aspects: missing context, comorbidities, and accountability. Keep humans in the loop.

- Microsoft MAI-DxO diagnostic orchestrator (research). Financial Times

“85.5% vs ~20% physicians on 304 NEJM cases under restricted conditions” (FT; Business Insider, 2025). Business Insider

What about voice agents for outreach and follow-ups?

Voice agents can triage routine calls, collect vitals, and remind patients at far lower cost than human outreach teams. Several vendors tout ~$9 per agent-hour. Pilot on non-diagnostic tasks first, disclose AI usage, and route any red flags to staff. Track abandonment rate, accuracy of data capture, and patient satisfaction. Advisory Board

Scope matters. Use voice for refills, prep, chronic-care reminders, and education. Escalate pain, confusion, or abnormal readings to clinicians.
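The escalation rule can be a small, auditable function that runs on every turn. The keyword list comes from the scope guidance above. The blood-pressure thresholds are hypothetical placeholders that your clinical leads should set. Matching is on whole words, so "painting" does not trigger "pain".

```python
import re
from typing import Optional

ESCALATION_TERMS = {"pain", "confusion", "confused"}  # extend with local clinical input
SYSTOLIC_HIGH, DIASTOLIC_HIGH, SYSTOLIC_LOW = 180, 120, 90  # hypothetical placeholders


def escalation_reasons(
    utterance: str, systolic: Optional[int] = None, diastolic: Optional[int] = None
) -> list[str]:
    """Return reasons to hand the call to staff; an empty list means the agent may continue."""
    reasons = []
    words = set(re.findall(r"[a-z]+", utterance.lower()))  # whole-word tokens
    for term in sorted(ESCALATION_TERMS & words):
        reasons.append(f"keyword:{term}")
    if systolic is not None and (systolic >= SYSTOLIC_HIGH or systolic < SYSTOLIC_LOW):
        reasons.append(f"systolic:{systolic}")
    if diastolic is not None and diastolic >= DIASTOLIC_HIGH:
        reasons.append(f"diastolic:{diastolic}")
    return reasons
```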

- Hippocratic AI positions voice agents at $9/hour; company materials and multiple outlets cite this rate. Validate it in contracts. Advisory Board; Hippocratic AI; Sacra

“Cost of operating Hippocratic’s AI agents is $9 an hour” (Advisory Board briefing, 2024). Advisory Board

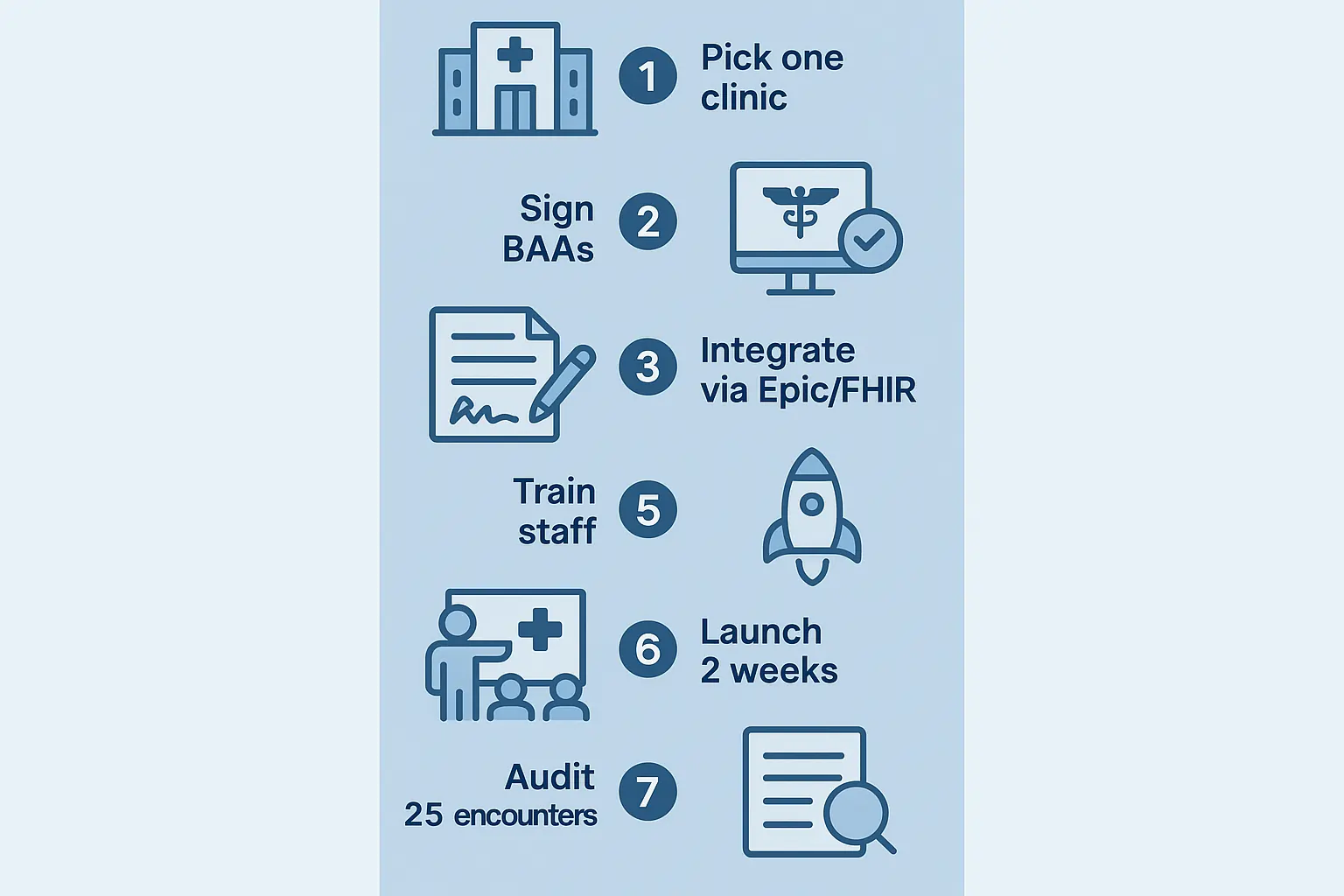

How to run a safe pilot, step by step (HowTo)

Start with one clinic and one use case. Use HIPAA-eligible services with a BAA, publish an AI disclosure, and measure before-and-after deltas. Keep a clinician in the loop. Review logs weekly. Tie any model change to a PCCP. Roll forward only when safety and satisfaction goals are met.

This path reduces risk and builds trust with clinicians and patients. It also speeds procurement because the scope is tight and metrics are obvious.

- Pilot stack: DAX Copilot for notes → HealthScribe for non-Epic lines → one voice agent for outreach → analytics dashboard for KPIs.

“FDA finalized PCCP guidance for AI device software functions, Dec 2024” (FDA). GovDelivery

HowTo steps (agent-ready):

- Select a pilot clinic and a use case.
- Sign BAAs; configure HIPAA controls.
- Integrate via Epic embed or FHIR API.
- Train staff; publish patient disclosure.
- Launch for 2 weeks; collect KPIs (note time, callbacks).
- Audit 25 random encounters for accuracy and tone.
- Review incidents; decide to expand, fix, or roll back.
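For the 25-encounter audit step, a seeded random sample keeps the selection unbiased and reproducible for the audit record. The function name and default seed are arbitrary choices for illustration.

```python
import random


def audit_sample(encounter_ids: list[str], k: int = 25, seed: int = 2025) -> list[str]:
    """Draw a reproducible random sample of encounters for manual accuracy and tone review."""
    rng = random.Random(seed)          # fixed seed so the same sample can be re-drawn later
    k = min(k, len(encounter_ids))     # small pilots may have fewer than k encounters
    return sorted(rng.sample(encounter_ids, k))
```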

FAQs:

Q1. Are agentic AI doctors legal to use in the U.S.?

A. Yes, for documentation, intake, and outreach, provided BAAs and HIPAA controls are in place. Diagnostic autonomy remains research-grade; keep human sign-off and follow FDA guidance on AI software changes (PCCP). U.S. Food and Drug Administration

Q2. Will these AIs replace clinicians?

A. No. Current evidence shows augmentation benefits; empathy and nuanced judgment still require clinicians.

Q3. Will an AI decide my treatment?

A. No. It suggests options and citations. Your clinician decides.

Q4. Where is my data stored?

A. In encrypted cloud storage within HIPAA-eligible services, with audit logs and least-privilege access.

Q5. How much does it cost?

A. Transcription runs about $0.10/min; some voice agents are priced near $9/hour. Integration and support costs are separate.

Q6. Who reviews the AI’s work?

A. Your clinician. All notes and suggestions are reviewed before orders are placed.

Comparison table: pick the right tool for the job

Use ambient scribing now; pilot voice outreach; keep diagnostic orchestration in research with IRB-style oversight.

| Platform (2025) | Price | Speed | Integrations | Support | Security | Use cases |
|---|---|---|---|---|---|---|
| Nuance DAX Copilot (Epic) | Contact sales | Near real-time | Epic embed | Enterprise | HIPAA, Microsoft controls | Ambient notes, SOAP drafts |
| AWS HealthScribe | ~$0.10/min | Near real-time | API + partner EHRs | AWS support | HIPAA-eligible | Transcription, summarization, evidence links |
| Hippocratic AI (voice) | ~$9/hour | Real-time | Telephony + EHR bridges | Enterprise pilots | Vendor-claimed healthcare guardrails | Outreach, education, triage |
| Microsoft MAI-DxO | Research only | Batch benchmarks | Model-agnostic | Research | Not cleared for clinical use | Diagnostic case orchestration |
| Google AMIE | Research only | Simulated evaluations | N/A | Research | N/A | Diagnostic dialogue research |

Troubleshooting: common beginner mistakes

Do not pilot diagnostics without human oversight. Do not skip a BAA or HIPAA review. Do not deploy without an opt-out. Do not treat a lab benchmark as clinical proof. Tie all model changes to a PCCP, and keep rollback scripts ready. U.S. Food and Drug Administration

Most issues come from scope creep and unclear ownership. Assign a product owner and a clinical safety officer for the pilot.

- Launch checklist: BAA, data map, audit logs, disclosure, metrics, and change control.

“Final PCCP guidance details info to include in marketing submissions” (FDA, 2024). U.S. Food and Drug Administration.