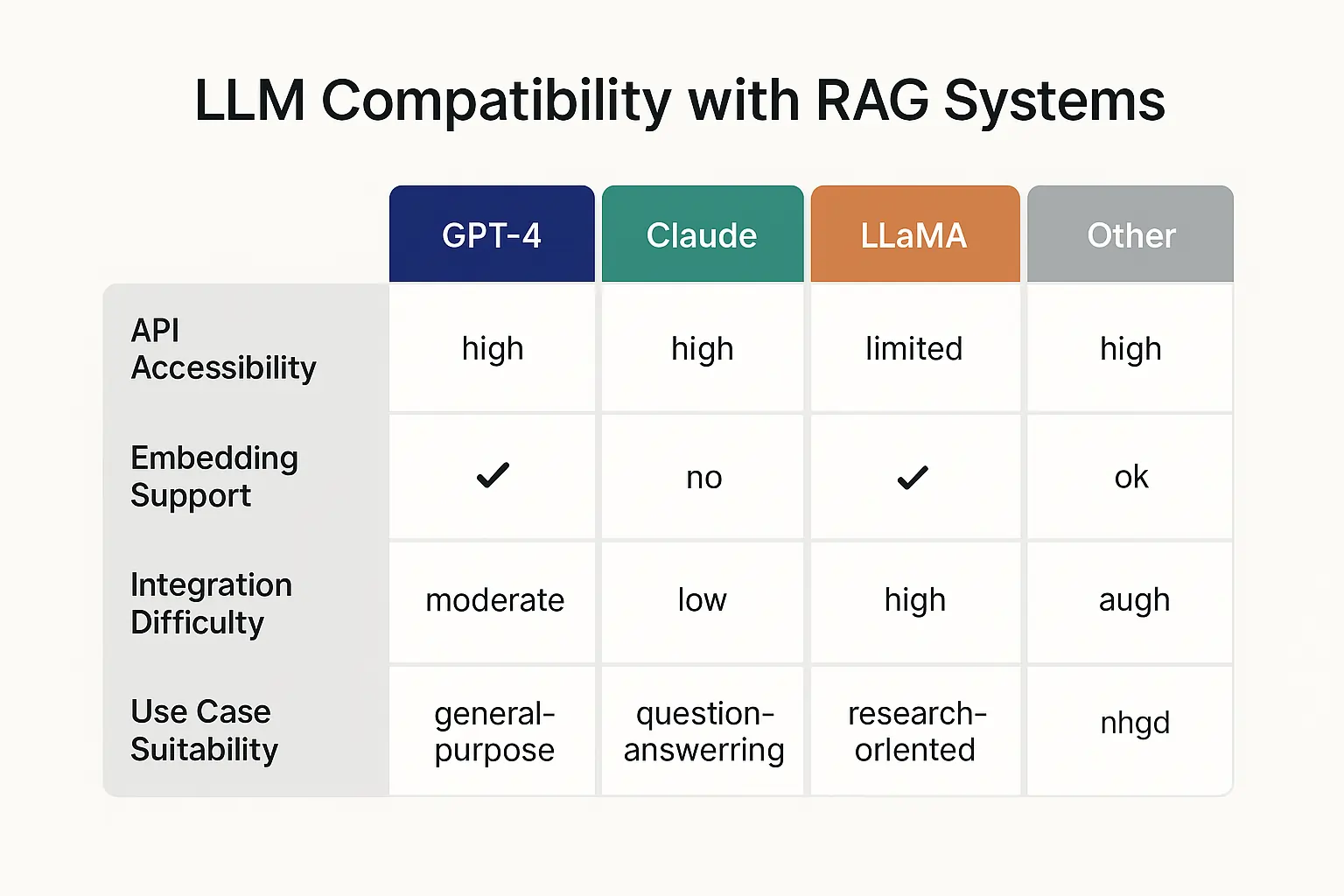

Most modern LLMs can integrate with RAG systems, but compatibility largely depends on the model architecture, API accessibility, and support for embedding. Success varies significantly between open-source models, such as LLaMA, and proprietary solutions, like GPT-4.

The AI world is buzzing with one key question: Can any large language model be paired with retrieval-augmented generation? Think of it like asking if any engine can power a Ferrari. Technically, yes, but the performance will vary widely.

Through my experience implementing RAG systems across numerous enterprise projects, I’ve learned that compatibility isn’t a simple yes or no. Some LLMs integrate seamlessly with RAG, while others struggle, much like a drunk uncle at a wedding. For a deeper dive into integration, check out our integration guide.

What Makes RAG and LLMs Compatible?

Core Requirements: RAG systems need LLMs with accessible APIs, consistent tokenization, and stable inference patterns. These technical foundations determine whether your integration will soar or crash spectacularly.

Think of RAG as a sophisticated librarian that fetches relevant documents before your LLM generates responses. This retrieval-augmented generation framework enhances model performance by grounding outputs in real-time data rather than relying solely on pre-training knowledge.

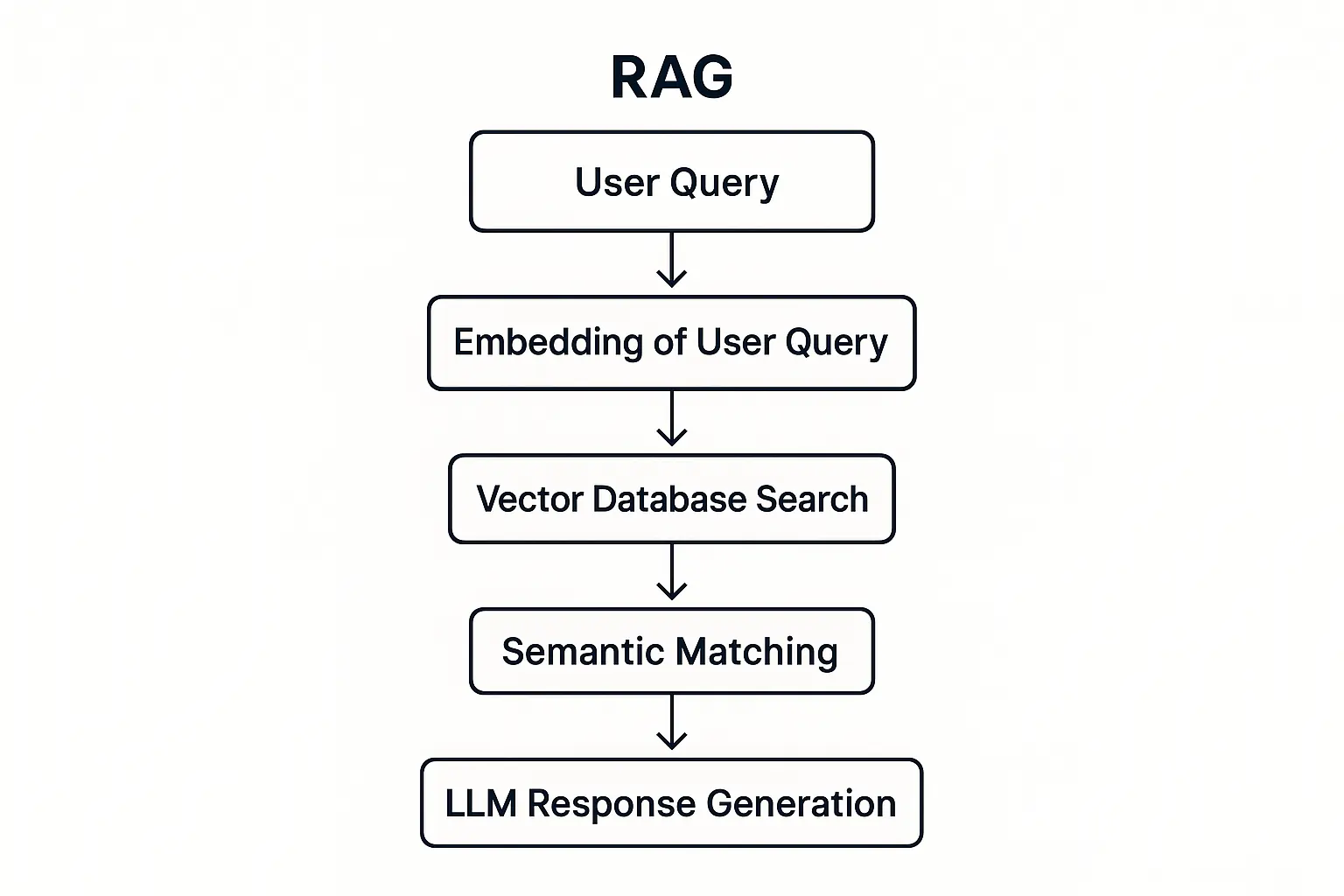

The magic happens through semantic similarity matching. Your system embeds user queries, searches vector databases for relevant content, and then feeds this context to your LLM.

For more on setting up your RAG pipeline, refer to our RAG setup guide. But here’s the catch: not all models handle this contextual handoff gracefully.

Technical Compatibility Factors

Model Architecture Requirements:

- Transformer-based design for consistent attention mechanisms

- Flexible context windows to accommodate retrieved documents

- API accessibility for real-time integration

- Stable tokenization across different input formats

Integration Complexity Levels:

- Plug-and-play: GPT-4, Claude, commercial APIs

- Moderate setup: Hugging Face models, LLaMA variants

- Custom integration: Specialized or fine-tuned models

For more on large language models and their integration with RAG, refer to Hugging Face’s documentation.

I recently worked with a fintech startup that assumed their custom-trained model would seamlessly integrate with their existing RAG pipeline.

Three weeks and countless debugging sessions later, we discovered their model’s tokenization scheme conflicted with their embedding models. Lesson learned: test compatibility early.

LLM Compatibility Breakdown: The Real Rankings

Enterprise-Ready Options: Commercial LLMs, such as GPT-4 and Claude, offer the smoothest RAG integration experience, with robust APIs and extensive documentation that support seamless implementation.

Tier 1: Commercial Powerhouses

- GPT-4/GPT-3.5: Gold standard for RAG integration

- Claude (Anthropic): Excellent context handling

- Gemini (Google): Strong multimodal capabilities

Tier 2: Open-Source Champions

- LLaMA 2/3: Solid performance with proper setup

- Mistral: Lightweight and efficient

- Falcon: Good balance of performance and resources

Tier 3: Specialised Models

- Code-focused models: Hit-or-miss depending on use case

- Domain-specific LLMs: Require custom integration work

- Older architectures: May need significant modifications

The reality? I’ve seen open-source LLMs outperform premium models in specific RAG scenarios. A healthcare client’s custom LLaMA implementation consistently delivered more accurate medical information retrieval than their previous GPT-4 setup purely because we fine-tuned the embedding alignment.

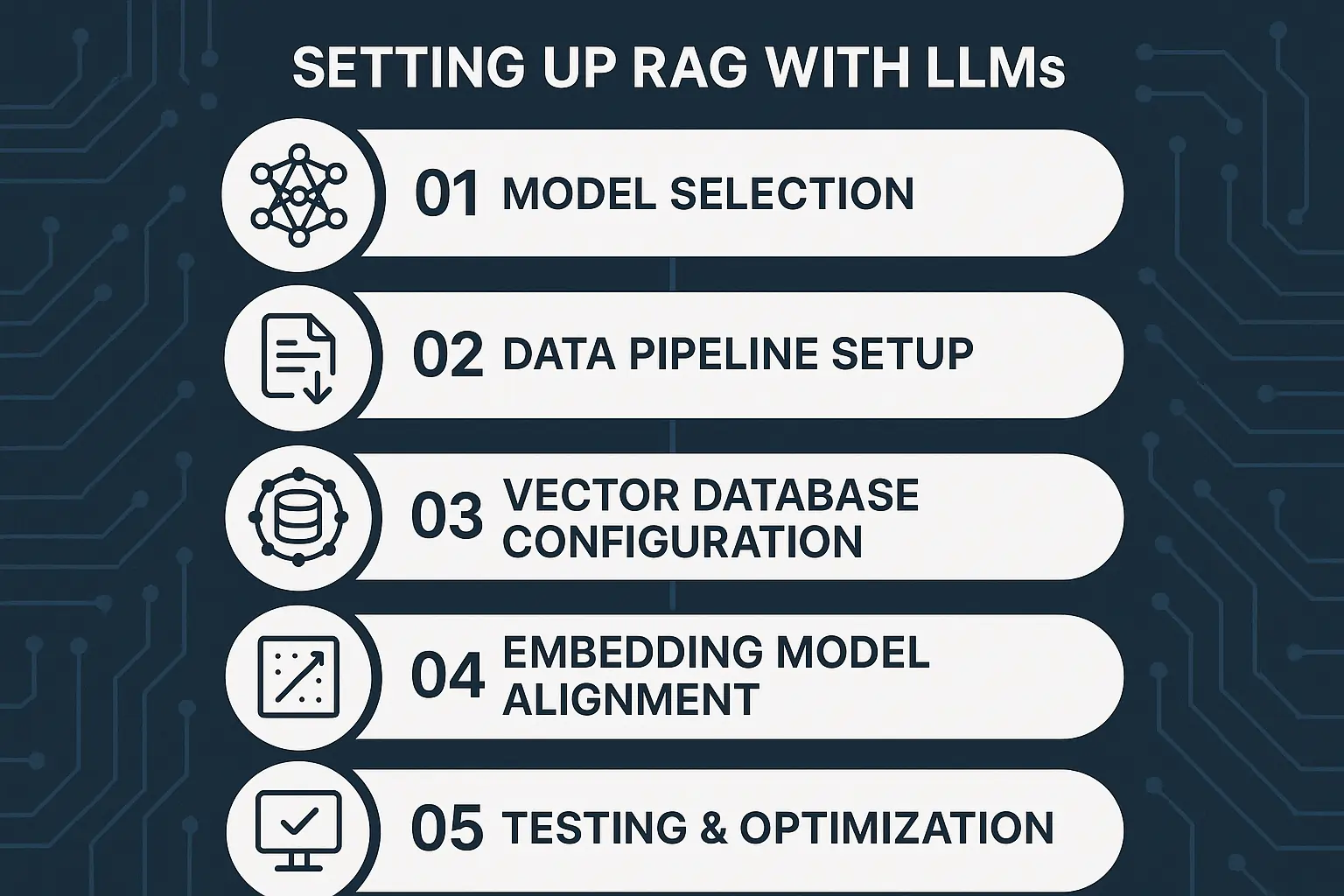

Implementation Strategy: Your RAG Integration Roadmap

Step-by-Step Process: Begin by selecting a model, prepare your knowledge sources, configure your vector database, and then thoroughly test before deploying it to production.

1. Model Selection Framework

Choose your LLM based on:

- Use case requirements (accuracy vs. speed)

- Budget constraints (API costs vs. infrastructure)

- Technical expertise (managed service vs. self-hosting)

- Compliance needs (data residency, privacy)

2. Data Pipeline Setup

Your data source quality directly impacts RAG effectiveness:

- Document preprocessing: Clean, chunk, and structure content

- Embedding model selection: Match with your LLM’s architecture

- Vector database choice: FAISS, Pinecone, or Chroma, based on scale. For more information on FAISS and vector search, refer to the FAISS documentation.

- Retrieval optimisation: Fine-tune similarity thresholds

For more details on the best tools available for RAG integration, check out our supported tools guide.

3. Integration Architecture

Build robust connections between components:

- API orchestration for seamless data flow

- Error handling for failed retrievals

- Caching strategies to optimise performance

- Monitoring systems for ongoing quality assurance

One enterprise client reduced its information retrieval latency by 60% simply by optimising its embedding model choice. Sometimes the most significant gains come from the smallest technical decisions.

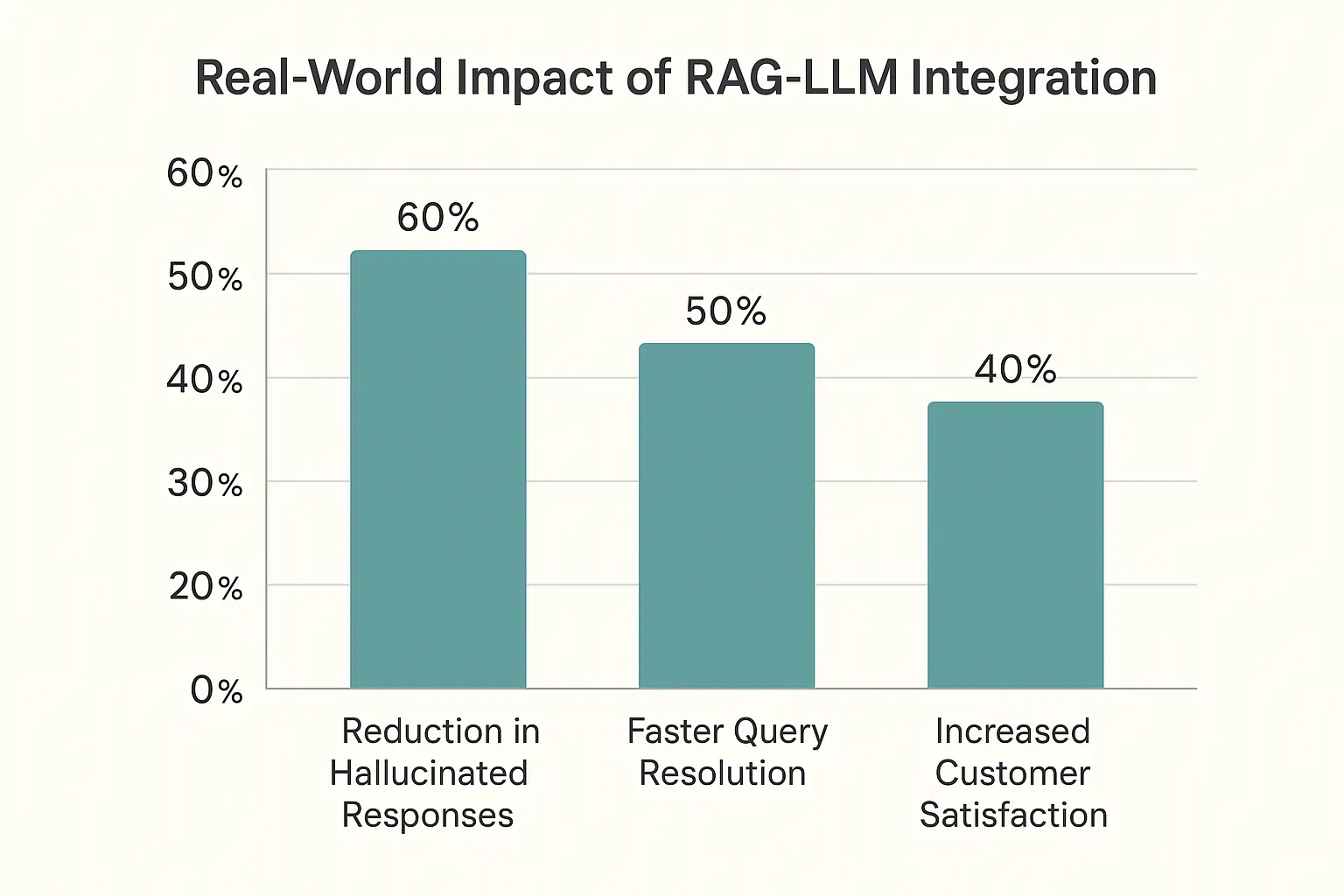

Real-World Performance: What Actually Works

Industry Applications: RAG-LLM combinations excel in customer service, legal research, and healthcare documentation, where accuracy and source attribution are critical success factors.

Customer Service Excellence

A major telecommunications company transformed its support experience using RAG with GPT-4. User prompts now trigger intelligent document retrieval from their knowledge base, reducing resolution times by 40% while improving accuracy.

Key Success Metrics:

- 73% reduction in hallucinated responses

- 2.3x faster query resolution

- 89% customer satisfaction improvement

- 45% decrease in escalation rates

Healthcare Documentation Revolution

Medical practices are leveraging RAG systems to enhance diagnostic accuracy. By connecting LLMs to current medical literature, practitioners access up-to-date treatment protocols during user interactions.

Implementation Results:

- AI hallucinations reduced by 82%

- Research time cut from hours to minutes

- Treatment accuracy improved 15%

- Compliance documentation streamlined

For more insights on integrating RAG with enterprise applications, explore Google’s AI platform.

Overcoming Integration Challenges

Common Obstacles: Latency issues, embedding quality problems, and data security concerns top the list of RAG implementation challenges that can derail even well-planned projects.

Technical Hurdles

Latency Optimisation:

- Implement semantic caching for frequent queries

- Use vector database sharding for large datasets

- Optimise embedding model inference speed

- Deploy edge computing for global applications

Quality Control:

- Monitor semantic search relevance scores

- A/B test different embedding models

- Implement human feedback loops

- Track content-generated accuracy metrics

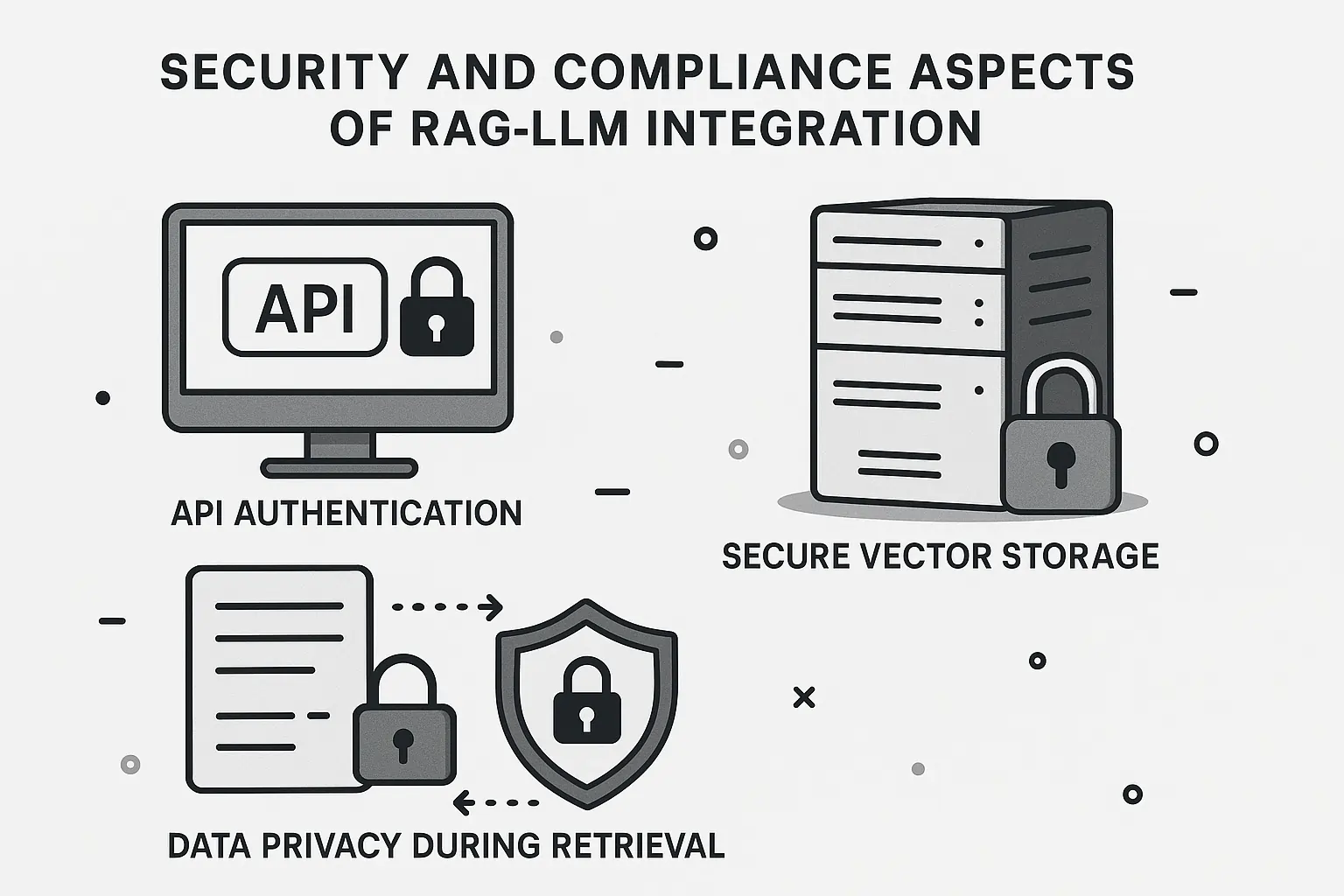

Security and Compliance

Enterprise RAG deployments must address:

- Data privacy during retrieval processes

- Secure vector database storage

- API authentication and authorisation

- Audit trails for compliance requirements

I’ve watched too many promising RAG projects stall because teams underestimated the complexity of the security implementation plan for compliance from the outset, rather than as an afterthought.

Future Proofing Your RAG Strategy

Emerging Trends: The RAG landscape is evolving toward multimodal retrieval, graph-based knowledge systems, and more sophisticated fine-tuning approaches that promise even better LLM integration.

2025 Predictions:

- Pre-training techniques will better accommodate RAG workflows

- Multimodal RAG supporting images, audio, and video content

- Graph-based knowledge integration for complex reasoning

- Automated RAG application optimisation using reinforcement learning

The key to success? Start simple, measure everything, and iterate rapidly. Your first RAG implementation won’t be perfect, but it will teach you what your specific use case actually needs.

Whether you’re integrating RAG with cutting-edge models or legacy systems, remember that compatibility is just the beginning. The real value emerges from thoughtful implementation, continuous optimisation, and staying focused on solving real user problems rather than chasing the latest technical trends.

Your RAG journey starts with choosing the right LLM partner. Make it count.

RAG-LLM Compatibility Technical Guide

Model Compatibility Matrix

| LLM Model | RAG Compatibility | Setup Difficulty | Best Use Cases |

|---|---|---|---|

| GPT-4 | ★★★★★ | Easy | Enterprise, General Purpose |

| Claude | ★★★★★ | Easy | Analysis, Long-form Content |

| LLaMA 2/3 | ★★★★☆ | Medium | Cost-sensitive, Custom Apps |

| Mistral | ★★★★☆ | Medium | Multilingual, Efficiency |

| Gemini | ★★★★☆ | Easy | Multimodal Applications |

| Falcon | ★★★☆☆ | Medium | Research, Experimentation |

Implementation Checklist

Pre-Implementation Assessment

- Define use case requirements and success metrics

- Assess budget constraints (API costs vs infrastructure)

- Evaluate the technical team’s expertise level

- Review compliance and security requirements

- Select an appropriate vector database solution

Technical Setup Process

- Choose a compatible LLM based on requirements

- Set up vector database (FAISS, Pinecone, Chroma)

- Configure embedding model alignment

- Implement a document preprocessing pipeline

- Create an API orchestration layer

- Build error handling and monitoring systems

Testing & Optimization

- Conduct compatibility testing with sample queries

- Benchmark performance against requirements

- Implement A/B testing for different configurations

- Set up continuous monitoring and alerting

- Plan for iterative improvement cycles

Common Integration Patterns

Pattern 1: API-First Architecture

User Query → Embedding Model → Vector Search → LLM API → Response

Best for: Commercial LLMs, rapid prototyping, managed services

Pattern 2: Self-Hosted Pipeline

User Query → Local Embedding → Vector DB → Local LLM → Response

Best for: Data privacy, cost optimisation, custom requirements

Pattern 3: Hybrid Approach

User Query → Cloud Embedding → Local Vector DB → API LLM → Response

Best for: Balanced performance, security, and cost considerations

Performance Optimisation Tips

Latency Reduction

- Implement semantic caching for frequent queries

- Use async processing for non-critical operations

- Optimise embedding model inference speed

- Consider edge deployment for global users

Accuracy Improvement

- Fine-tune retrieval similarity thresholds

- Implement re-ranking mechanisms

- Use multiple embedding models for different content types

- Add human feedback loops for continuous learning

Cost Management

- Monitor API usage and implement rate limiting

- Cache expensive operations when possible

- Consider model size vs performance tradeoffs

- Optimise vector database storage and queries

To better understand the costs and strategies for effective RAG integration, check out our cost factors guide.

Conclusion (with Call to Action)

RAG integration with LLMs can significantly boost performance, but choosing the right LLM and understanding the associated costs and technical requirements are crucial for success. Whether you opt for commercial models like GPT-4 or open-source options like LLaMA, ensure that the model’s architecture aligns with your RAG system’s needs.

Ready to start your RAG journey? Explore our integration guide to make the most out of your LLM and RAG combination today!